In the fast-evolving world of artificial intelligence (AI), one term has rapidly gained prominence: prompt engineering. As AI systems like GPT-4 are increasingly used across industries, the ability to craft clear, precise prompts has become a vital skill. The effectiveness of AI-driven tasks, from automated content generation to sophisticated data analysis, hinges on how well users communicate with these systems through prompts.

Prompt engineering involves designing inputs that guide AI models toward producing accurate and relevant outputs. A language model interprets natural language prompts, and the importance of structuring these prompts effectively cannot be overstated. Whether you’re asking a generative AI to write an article or summarize data, the quality of the output is directly influenced by the clarity and structure of the prompt. It’s a concept rooted in linguistic clarity and computational precision, and it’s quickly becoming a critical competency for businesses leveraging AI technologies.

This article explores the best practices for prompt engineering, offering comprehensive strategies and insights that cater to both AI beginners and experienced professionals. We will discuss how thoughtful prompt design enhances AI performance and provide real-world applications to demonstrate these principles in action. Whether you’re fine-tuning AI for customer service, content creation, or data processing, mastering prompt engineering will help unlock the full potential of AI systems.

What is Prompt Engineering in Generative AI?

Prompt engineering is the practice of designing and refining prompts to effectively guide AI models, like GPT (Generative Pre-trained Transformer), in generating accurate, useful, and contextually appropriate outputs. This method is essential for maximizing the potential of these models. As AI integrates more deeply into industries such as customer service, content creation, and beyond, the precision with which users communicate with AI through well-crafted prompts significantly impacts the relevance and quality of the results.

The concept of prompt engineering has evolved in parallel with the rise of generative AI, particularly with the development of large language models like GPT-3 and GPT-4. Earlier AI systems required rigid, highly structured inputs. However, as natural language processing (NLP) advanced, these models became capable of handling more conversational and flexible inputs. As AI’s capabilities expanded, the need for more sophisticated prompt engineering techniques emerged, transitioning from basic question-asking to a complex process of guiding AI toward achieving desired outcomes across various applications.

Prompt engineering applies to images as well as text: text-to-image models like DALL-E generate images from user prompts, and refining those prompts is often what it takes to get the desired result.

For businesses and professionals aiming to leverage AI effectively, mastering prompt engineering is essential. Thoughtfully designed prompts not only lead to more accurate and relevant outputs but also reduce the need for time-consuming corrections. For example, in content creation, clear prompts help AI generate articles that align with the desired tone and structure, while in customer support, well-defined prompts ensure that AI assistants can respond to inquiries with precision. Whether it’s improving decision-making through data analysis or enhancing creative outputs in marketing, prompt engineering enables users to optimize AI performance across numerous domains.

Understanding AI Prompts

Understanding AI prompts is crucial for effective prompt engineering. An AI prompt is a piece of text that is used to elicit a specific response from a generative AI model. It can be a question, a statement, or a command that guides the AI model to generate a desired output. AI prompts can be used in various applications, such as language translation, text summarization, and image generation.

To understand AI prompts, it’s essential to know the different types of prompts that can be used. These include:

- Zero-shot prompts: These prompts ask the model to perform a task without providing any examples of the desired output. For example, asking an AI to “translate this sentence into French” without any prior examples.

- Few-shot prompts: These prompts include a few examples of the desired output before the actual request. For instance, showing the AI a couple of translated sentences before asking it to translate a new one.

- Chain-of-thought prompts: These prompts ask the model to work through intermediate reasoning steps, or show it worked examples of such reasoning, before it gives a final answer. This method is particularly useful for complex tasks that require detailed reasoning.

By understanding these different types of prompts, you can optimize your interactions with generative AI models. Knowing when and how to use each type can significantly enhance the quality of the AI’s responses, making your prompt engineering skills more effective.
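As a concrete illustration, the minimal Python sketch below builds each of the three prompt types as a plain string. The function names (`zero_shot`, `few_shot`, `chain_of_thought`) and the prompt wording are illustrative assumptions, and no model is called; the point is only to show how the text sent to the model differs between styles.

```python
# Hypothetical helpers that build the three prompt styles as plain strings.
# No model is called; the output is just the text you would send.

def zero_shot(sentence: str) -> str:
    # Zero-shot: the instruction alone, with no worked examples.
    return f"Translate this sentence into French:\n{sentence}"


def few_shot(examples: list[tuple[str, str]], sentence: str) -> str:
    # Few-shot: a handful of input/output pairs precede the new input,
    # so the model can infer the expected format and style.
    blocks = ["Translate each English sentence into French."]
    for english, french in examples:
        blocks.append(f"English: {english}\nFrench: {french}")
    # The new input ends with an open "French:" slot for the model to fill.
    blocks.append(f"English: {sentence}\nFrench:")
    return "\n\n".join(blocks)


def chain_of_thought(question: str) -> str:
    # Chain-of-thought: ask for intermediate reasoning before the final answer.
    return (
        f"{question}\n"
        "Work through the problem step by step, then give the final answer "
        "on its own line, prefixed with 'Answer:'."
    )


examples = [("Good morning.", "Bonjour."), ("Thank you very much.", "Merci beaucoup.")]
print(zero_shot("Where is the train station?"))
print(few_shot(examples, "Where is the train station?"))
print(chain_of_thought("A store sells pens at 3 for $2. How much do 12 pens cost?"))
```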

Developing Prompt Engineering Skills

Developing prompt engineering skills is essential for anyone who wants to work with generative AI models. Prompt engineering is the process of designing and optimizing prompts to get a desired response from a generative AI model. It requires a deep understanding of the AI model, the task at hand, and the desired output.

To develop prompt engineering skills, you need to have a good understanding of the following:

- Generative AI models: You need to know how generative AI models work and how they can be used to generate different types of output. Familiarize yourself with models like GPT-3 and GPT-4, and understand their capabilities and limitations.

- Prompting techniques: You need to know the different prompting techniques that can be used to elicit a response from a generative AI model. Techniques such as zero-shot, few-shot, and chain-of-thought prompting are essential tools in your arsenal.

- Complex tasks: You need to know how to break down complex tasks into simpler tasks that can be handled by a generative AI model. This involves understanding the task’s requirements and structuring your prompts to guide the AI effectively.

- Few-shot prompting: You need to know how to use few-shot prompting to elicit a response from a generative AI model. Providing a few examples can help the AI understand the desired output more clearly.

- Prompt engineering guide: You need to have a guide on how to design and optimize prompts to get a desired response from a generative AI model. This guide should include best practices, common pitfalls, and strategies for refining prompts.

By developing these skills, you can optimize your prompts to get the desired response from a generative AI model. This can help you achieve your goals and objectives more efficiently and effectively.

Real-World Applications

- Content Creation: Digital marketing teams use prompt engineering to generate blog posts, social media content, and product descriptions tailored to their audience’s preferences. Refined prompts help businesses produce targeted, engaging content more efficiently. Image prompting techniques are also used to optimize prompts for text-to-image models like DALL-E and Stable Diffusion, which generate high-quality images from textual descriptions.

- Customer Support: AI chatbots and virtual assistants rely on prompt engineering to deliver precise answers to customer queries. For instance, asking an AI assistant to provide troubleshooting steps for a particular device involves crafting a prompt with clear context and specific instructions to avoid ambiguous responses.

- Data Analysis: In fields like finance and healthcare, data scientists employ AI to analyze large datasets. With well-structured prompts, they can direct AI to produce insightful summaries, forecasts, or actionable recommendations, thereby improving decision-making processes.

Prompt engineering is more than just asking questions—it’s about crafting effective communication with AI models to achieve specific, high-quality outputs across various industries. By understanding the intricacies of prompt design, professionals can fully harness the power of AI to enhance business operations and decision-making.

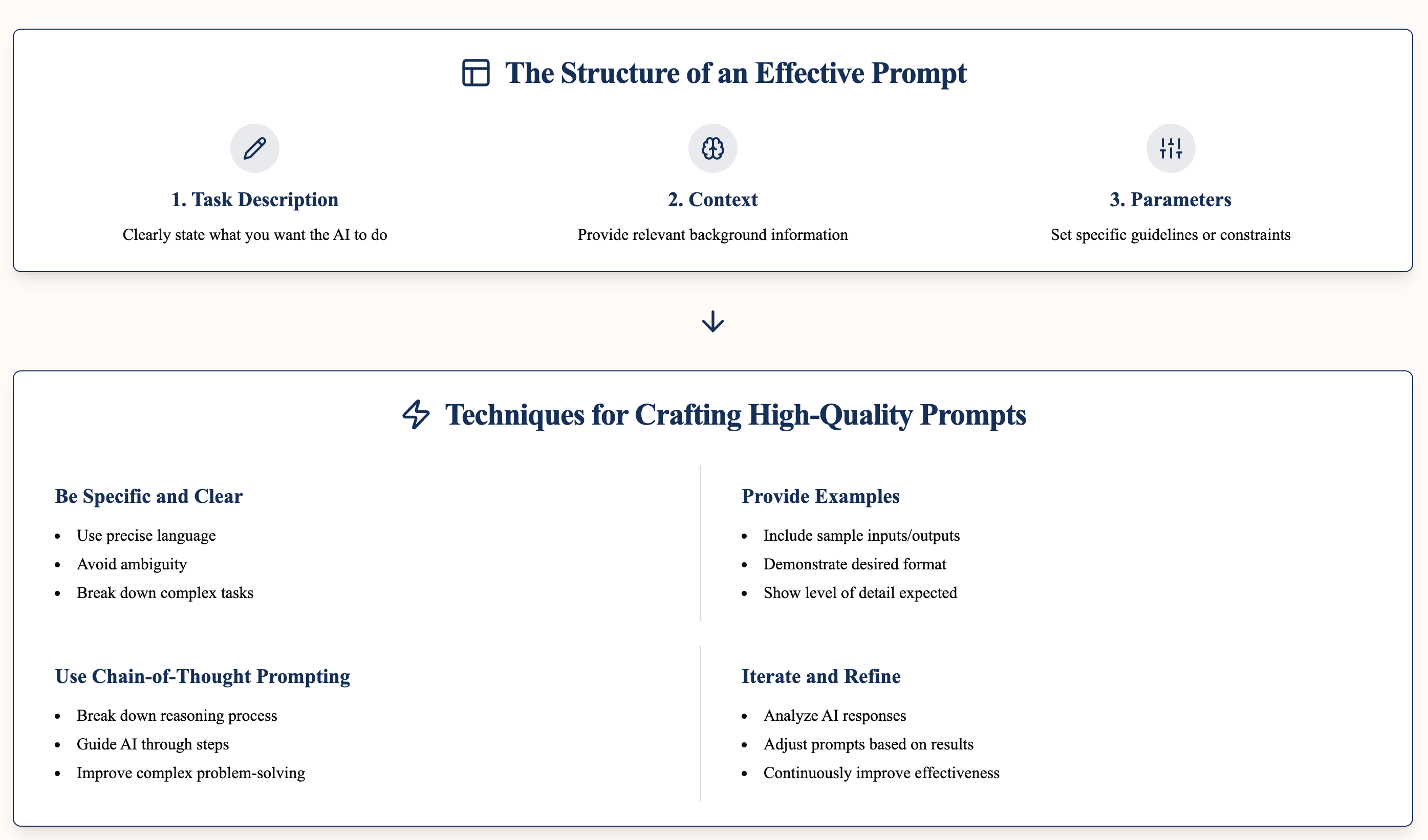

The Structure of an Effective Prompt

The design of an effective prompt is crucial for directing AI models to produce accurate and high-quality results. While the complexity of prompts can vary, a well-structured prompt typically includes four key components: context, task, requirements, and instructions. When these elements are properly defined, they greatly enhance the AI's ability to understand and respond effectively to user queries.

1. Context

Context provides the background information necessary for the AI to generate relevant responses. Supplying adequate situational details ensures the AI can frame its response within the desired domain. For instance, specifying whether a technical explanation or a customer-friendly response is needed can drastically alter the AI's output.

Example: If you're writing a product description for a fitness gadget, include the product's purpose, the target audience, and its key features as part of the context.

2. Task

The task defines the specific objective of the prompt. Clearly stating the task helps prevent vague or generic responses and prompts more focused outputs.

Example: “Write a compelling product description” precisely defines the task, while simply asking for information about the product could result in a more general, less specific response.

3. Requirements

Requirements provide any specific constraints or guidelines the AI must follow to ensure the response meets expectations. This could include word count limits, tone specifications, or particular information that must be highlighted.

Example: “The description should be under 150 words, written in an enthusiastic tone, and highlight the product's waterproof feature.”
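Requirements like a word limit or a mandatory feature mention are measurable, so drafts can be checked automatically before anyone reviews them. The sketch below is a hypothetical Python helper, `check_requirements`, that tests the two measurable constraints from the example; tone cannot be verified this simply and is left to human review.

```python
def check_requirements(text: str, max_words: int = 150,
                       must_mention: tuple[str, ...] = ("waterproof",)) -> list[str]:
    """Return the requirements a draft violates; an empty list means every check passed."""
    problems = []
    word_count = len(text.split())  # simple whitespace-based word count
    if word_count >= max_words:     # "under 150 words" means strictly fewer
        problems.append(f"{word_count} words; the limit is under {max_words}")
    for term in must_mention:
        # Case-insensitive check that each required feature is mentioned.
        if term.lower() not in text.lower():
            problems.append(f"missing required mention: '{term}'")
    return problems


draft = "Meet the tracker built for adventure: fully waterproof, ready for any trail."
print(check_requirements(draft))                # no violations
print(check_requirements("A fitness tracker."))  # missing the waterproof mention
```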

4. Instruction

Instruction is a crucial aspect of prompt engineering. It involves providing clear and concise instructions to a generative AI model on what to do and how to do it. The instructions should be specific, measurable, achievable, relevant, and time-bound (SMART).

To provide effective instructions, you need to have a good understanding of the following:

- AI model: You need to know how the AI model works and what it can do. Understanding the model’s strengths and limitations will help you craft instructions that it can follow accurately.

- Task: You need to know what task you want the AI model to perform. Clearly defining the task helps prevent ambiguity and ensures the AI understands what is expected.

- Desired output: You need to know what output you want the AI model to generate. Being specific about the desired outcome helps the AI produce more relevant and accurate results.

- Complex reasoning: You need to know how to break down complex tasks into simpler tasks that can be handled by the AI model. This involves structuring your instructions in a way that guides the AI through each step of the process.

- Domain knowledge: You need to have domain knowledge on the task at hand and the desired output. This helps you provide context and details that the AI needs to generate high-quality responses.

By providing effective instructions, you can optimize your prompts to get the desired response from a generative AI model. This can help you achieve your goals and objectives more efficiently and effectively.

Clear instructions guide the AI on how to structure the response. This might involve step-by-step directions or examples to help the AI achieve a specific style or content format.

Example: “Begin with an engaging sentence about how this product enhances daily workouts, list three key features, and conclude with a call to action.”

Practical Examples

- Good Prompt Example: “You are a marketing writer tasked with creating a product description for a new waterproof fitness tracker. The target audience is active individuals aged 25–40 who enjoy outdoor activities. Write a description under 150 words in an enthusiastic tone that highlights the product's waterproof feature, long battery life, and sleek design. End with a call to action encouraging readers to purchase the tracker for their next adventure.”

- Bad Prompt Example: “Write a description of a fitness tracker.”
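The good prompt above can be read as the four components stacked together. The following Python sketch assembles a similar prompt from a hypothetical `PromptSpec` dataclass, one field per component; the field names and section labels are assumptions, not a standard format. Keeping the components separate makes it easy to change one (say, the tone requirement) without rewriting the rest.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container holding one field per prompt component."""
    context: str
    task: str
    requirements: list[str]
    instructions: str

    def render(self) -> str:
        # Requirements become a bulleted list so each constraint stands out.
        reqs = "\n".join(f"- {r}" for r in self.requirements)
        return (
            f"{self.context}\n\n"
            f"Task: {self.task}\n\n"
            f"Requirements:\n{reqs}\n\n"
            f"Instructions: {self.instructions}"
        )


spec = PromptSpec(
    context=("You are a marketing writer. The product is a new waterproof fitness tracker "
             "for active individuals aged 25-40 who enjoy outdoor activities."),
    task="Write a product description.",
    requirements=["Under 150 words", "Enthusiastic tone",
                  "Highlight the waterproof feature, long battery life, and sleek design"],
    instructions=("Open with how the tracker enhances outdoor workouts, cover the three "
                  "features, and end with a call to action to buy it for the next adventure."),
)
print(spec.render())
```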

How These Components Work Together in Large Language Models

When effectively combined, these four elements (context, task, requirements, and instructions) provide a structured framework that guides the AI toward generating the desired output. For instance, when a prompt defines the target audience, tone, and critical product features, the AI is equipped to create a description that not only meets the content requirements but also resonates with the intended audience.

The same principle applies to text-to-image models: the level of detail in a prompt's description largely determines whether the generated image comes out realistic, artistic, or abstract.

This structured approach enables the flexibility to craft prompts for a variety of tasks, from generating creative content to analyzing data. By adhering to these principles, prompt engineers can consistently generate more accurate and relevant outputs, thereby improving the effectiveness of AI interactions across different applications.

Techniques for Crafting High-Quality Prompts

Crafting high-quality prompts is an essential part of working effectively with AI models. By refining the way we ask questions, we can dramatically improve the quality of AI responses, making the process more efficient and productive. This section explores some key techniques for creating high-quality prompts that yield accurate, coherent, and useful results.

1. Use of Natural Language

One of the most important aspects of crafting effective prompts is using natural, conversational language. Natural language allows AI systems to better grasp the context and produce responses that are more coherent and relevant. Avoiding overly technical jargon or ambiguous phrasing reduces the likelihood of misunderstandings or irrelevant answers. Instead, focusing on clarity and specificity helps ensure the AI fully understands the prompt's intent. This, in turn, improves the quality of the output and decreases the need for multiple attempts to get the desired result.

Example: Rather than saying "Summarize," a clearer approach would be to ask, "Can you summarize this article in simple terms for a beginner?" This ensures the AI provides a response that is better suited for the intended audience.

2. Iterative Refinement

Crafting effective prompts is often an iterative process. The initial outputs might need adjustments before they meet the desired expectations. By carefully reviewing the AI's responses and making iterative refinements—whether through adding more context, simplifying the prompt, or clarifying specific requirements—you can guide the AI toward generating more precise and relevant outputs. This process helps achieve a balance between prompt complexity and the quality of the AI's output, leading to more targeted and useful results.

Example: If the AI produces a general summary, you can refine the prompt by specifying, "Include key points about the AI technology mentioned," to make the output more focused and informative.
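One way to keep refinement systematic is to treat each prompt revision as a new version and change one thing at a time, so every improvement in output can be traced to a specific edit. A minimal Python sketch, with a hypothetical `refine` helper and an illustrative second clarification:

```python
def refine(prompt: str, clarification: str) -> str:
    # Append one targeted clarification instead of rewriting the whole prompt,
    # so each change maps to the weakness it was meant to fix.
    return f"{prompt.rstrip()} {clarification.strip()}"


history = ["Summarize this article."]
# v2 addresses an overly general summary (the example above).
history.append(refine(history[-1], "Include key points about the AI technology mentioned."))
# v3 is a hypothetical further refinement for audience and length.
history.append(refine(history[-1], "Keep it under 100 words for a beginner audience."))

for version, prompt in enumerate(history, start=1):
    print(f"v{version}: {prompt}")
```

Storing every version also makes it easy to roll back if a refinement makes the output worse.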

3. Avoiding Common Pitfalls

When crafting prompts, there are common pitfalls that can lead to subpar results. One frequent issue is being too vague, which forces the AI to guess the user's intent, often resulting in irrelevant or incomplete answers. On the other hand, making the prompt overly complex can confuse the AI, producing outputs that are unclear or convoluted. Another mistake is assuming the AI has background knowledge of the topic, which can create gaps in its response.

Tip: Always provide sufficient context and ensure the task is clearly defined, without burdening the prompt with excessive or unnecessary details that might overwhelm the system.

For instance, in a marketing scenario, a company initially asked AI to "generate an ad for our new product." The result was a generic advertisement that lacked a strong call to action. However, by refining the prompt to include more details such as the target audience, key product benefits, and the desired tone, the AI produced a much more specific and compelling ad. The improved prompt read: "Write a short ad for our new eco-friendly water bottle, targeting environmentally conscious consumers. The ad should highlight sustainability, durability, and include a strong call to action encouraging customers to buy now."

Starting with a general version of the prompt and analyzing the AI's output allows for adjustments in language, instructions, or structure to achieve greater clarity and relevance. Testing prompts on smaller tasks before deploying them in more complex scenarios is a valuable strategy to ensure they are effective and tailored to the specific use case. Through the application of these techniques, users can create high-quality prompts that yield better results from AI systems.

Advanced Strategies for Experienced Prompt Engineers

For seasoned prompt engineers, moving beyond basic techniques and exploring more advanced strategies can significantly enhance the effectiveness of AI models. This section delves into some of the most sophisticated approaches, including few-shot learning, contextual layering, and prompt chaining, offering experienced professionals the tools to further refine their AI interactions.

Few-shot Learning

Few-shot learning is a technique where AI models are given a small number of examples from which to infer how to perform a task. In prompt engineering, this means embedding a few examples within the prompt itself; the model picks up the pattern in context, without any update to its weights, and can then respond accurately to new requests without being overwhelmed by excessive data. This approach is particularly beneficial when training on a large dataset is impractical or unnecessary for the task at hand.

Example: If you're asking AI to summarize articles, including a couple of examples of well-summarized pieces in the prompt can guide the model in generating similar, high-quality summaries for new articles.
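Many chat-based model APIs, including OpenAI's Chat Completions API, accept a list of messages with `role` and `content` fields. Assuming that format, the Python sketch below encodes few-shot examples as alternating user and assistant turns ahead of the new request. The helper name and placeholder texts are hypothetical, and the sketch only builds the list; it does not call any API.

```python
def build_few_shot_messages(examples: list[tuple[str, str]],
                            new_article: str) -> list[dict[str, str]]:
    """Build a chat-style message list: instructions, worked examples, then the new input."""
    messages = [{
        "role": "system",
        "content": "Summarize each article in one sentence for a general audience.",
    }]
    for article, summary in examples:
        # Each example is a user turn (input) followed by an assistant turn (ideal output).
        messages.append({"role": "user", "content": article})
        messages.append({"role": "assistant", "content": summary})
    # The real request comes last, in the same shape as the examples.
    messages.append({"role": "user", "content": new_article})
    return messages


examples = [
    ("<text of example article 1>", "<an ideal one-sentence summary of article 1>"),
    ("<text of example article 2>", "<an ideal one-sentence summary of article 2>"),
]
messages = build_few_shot_messages(examples, "<text of the new article>")
for m in messages:
    print(f"{m['role']:>9}: {m['content']}")
```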

Contextual Layering

Contextual layering is about structuring prompts to build the AI's understanding gradually. Rather than providing all information upfront, layering allows the AI to process information step by step, guiding it through complex tasks. This is especially useful for multi-step processes or tasks that require precise handling of nuanced information.

Example: In legal technology, you could first ask the AI to review a specific legal case. Later, you can provide additional instructions, such as extracting key legal principles, ensuring that the AI builds on its previous responses and provides deeper analysis with each step.
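In a chat setting, contextual layering usually means sending the whole conversation history with every new request, so each instruction builds on the earlier layers. The Python sketch below assumes a role/content message format and uses a stand-in `fake_model` function in place of a real LLM call; `ask` and the bracketed placeholders are hypothetical.

```python
from typing import Callable

Message = dict[str, str]


def ask(history: list[Message], user_text: str,
        model: Callable[[list[Message]], str]) -> str:
    """Append a user turn, call the model with the full history, and record its reply."""
    history.append({"role": "user", "content": user_text})
    reply = model(history)  # the model sees every earlier layer, not just this turn
    history.append({"role": "assistant", "content": reply})
    return reply


def fake_model(history: list[Message]) -> str:
    # Stand-in for a real LLM call: reports how much context it received.
    return f"(reply; context size = {len(history)} messages)"


history: list[Message] = []
ask(history, "Review the following case summary: <case text>", fake_model)
ask(history, "Now extract the key legal principles from that case.", fake_model)
print(ask(history, "Explain how those principles apply to <new facts>.", fake_model))
```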

Prompt Chaining

Prompt chaining is a method where multiple prompts are linked together to break down complex tasks into smaller, more manageable parts. This approach is effective for workflows that involve several stages of reasoning or tasks that span across different domains. Each prompt builds upon the results of the previous one, helping the AI process information incrementally and produce more refined outputs.

Example: In a healthcare scenario, you might start by asking AI to gather patient history, then move on to analyzing symptoms, and finally request a diagnosis based on the information collected in the previous steps. This step-by-step approach allows AI to handle complex decision-making processes with greater accuracy and organization.
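Unlike contextual layering, where one conversation grows, prompt chaining can run each step as a separate call whose output is inserted into the next prompt template. A minimal Python sketch of that pattern, assuming a generic `model` callable (here a stub) and illustrative templates that follow the healthcare steps above:

```python
from typing import Callable


def run_chain(templates: list[str], initial_input: str,
              model: Callable[[str], str]) -> list[str]:
    """Run each template in order, feeding the previous step's output into {previous}."""
    outputs = []
    previous = initial_input
    for template in templates:
        prompt = template.format(previous=previous)  # plug in the prior result
        previous = model(prompt)
        outputs.append(previous)
    return outputs


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call: echoes the first line of the prompt it received.
    return f"result of '{prompt.splitlines()[0]}'"


templates = [
    "Summarize the relevant patient history in these notes:\n{previous}",
    "List the symptoms described in this history:\n{previous}",
    "Based on these symptoms, list possible diagnoses for a clinician to review:\n{previous}",
]
outputs = run_chain(templates, "<raw clinical notes>", fake_model)
print(outputs[-1])
```

Because each step is a separate call, intermediate outputs can be inspected or corrected before they feed the next step. Note that `str.format` treats any other braces in a template as fields, so literal braces must be doubled.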

Advanced Use Cases for Complex Tasks

For those who are experienced in prompt engineering, advanced techniques such as few-shot learning, contextual layering, and prompt chaining open up new possibilities in specialized fields. These strategies can be applied in several sectors, including:

- Legal Tech: AI can assist with tasks such as case law research, contract drafting, and litigation analysis by combining contextual layering and prompt chaining to provide deeper insights into legal precedents and strategies.

- Healthcare: By employing prompt chaining, AI can offer step-by-step diagnosis support, check for drug interactions, and even generate personalized treatment plans.

- Data-Driven Decision Making: In sectors like finance and marketing, combining few-shot learning with contextual layering enables AI to produce detailed reports or predictive models based on minimal, but strategically provided, data.

These advanced strategies—few-shot learning, contextual layering, and prompt chaining—represent the next stage of prompt engineering. They enable more sophisticated interactions with AI, allowing for complex workflows and highly tailored outputs. By applying these techniques, prompt engineers can unlock new levels of efficiency and precision, particularly in fields that demand detailed, multi-step processes and deeper contextual understanding.

Benefits of Effective Prompt Engineering

Effective prompt engineering can have numerous benefits, including:

- Improved model performance: Effective prompt engineering can improve the performance of a generative AI model by providing clear and concise instructions on what to do and how to do it. This leads to more accurate and relevant outputs.

- Increased efficiency: Effective prompt engineering can increase efficiency by reducing the time and effort required to generate a desired output. Well-crafted prompts minimize the need for multiple iterations and corrections.

- Enhanced creativity: Effective prompt engineering can enhance creativity by defining clear parameters within which the AI can explore creative solutions.

- Better decision-making: Effective prompt engineering can improve decision-making by stating the criteria and objectives that the AI’s responses must align with.

By developing effective prompt engineering skills, you can optimize your prompts to get the desired response from a generative AI model. This can help you achieve your goals and objectives more efficiently and effectively.

Ethical Considerations in Prompt Engineering

Ethical concerns play a significant role in prompt engineering, and one of the primary issues is the risk of introducing bias into AI outputs. AI models are trained on large datasets that may contain biases related to race, gender, or socio-economic factors. Prompt engineers should be mindful of these biases and take active steps to minimize their impact on AI responses. By crafting neutral prompts and avoiding language that could perpetuate harmful stereotypes, prompt engineers can promote fairness and inclusivity in AI-generated content. For example, when designing a prompt for a hiring assistant tool, the engineer should avoid gendered language and any emphasis on traits that could reinforce stereotypes, supporting a more equitable AI-driven hiring process.

Transparency in Prompt Design

Maintaining transparency in how prompts are created and refined is crucial for building trust in AI outputs. Users need to understand the rationale behind prompt design, including any assumptions or limitations embedded within. This is particularly important in sensitive sectors like healthcare, law, or finance, where AI-generated outputs can have a direct impact on people's lives. Providing clear documentation of the prompt engineering process helps ensure accountability and allows stakeholders to evaluate and improve ethical standards.

Example: In the legal sector, prompts designed for contract analysis should clearly outline tasks, such as flagging ambiguous clauses or highlighting missing terms. Transparency in how these prompts are developed ensures that clients know the AI operates within ethical boundaries.
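Documentation of this kind can be as simple as a structured record stored alongside each prompt. The Python sketch below uses a hypothetical `PromptRecord` dataclass for the contract-analysis example; the fields (version, purpose, assumptions, limitations) are one reasonable choice, not an established standard, and `{contract_text}` is a placeholder to be filled at run time.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PromptRecord:
    """Hypothetical documentation record kept alongside a production prompt."""
    name: str
    version: str
    purpose: str
    prompt: str
    assumptions: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)


record = PromptRecord(
    name="contract-clause-review",
    version="1.2",
    purpose="Flag ambiguous clauses and missing terms for attorney review.",
    prompt=("Review the contract below. List each ambiguous clause and any standard "
            "terms that appear to be missing.\n\n{contract_text}"),
    assumptions=["Input is an English-language commercial contract."],
    limitations=["Output is a review aid; an attorney makes the final call."],
)

# Serialize to JSON so the record can be versioned and shared with stakeholders.
print(json.dumps(asdict(record), indent=2))
```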

Responsible AI Use

Prompt engineers also have the responsibility to ensure AI systems are used ethically, especially in areas like customer service, content moderation, and healthcare. Responsible use of AI involves being aware of potential risks, such as the unintended generation of misleading or harmful information. By incorporating safeguards into prompts—such as ensuring factual accuracy or addressing sensitive topics carefully—prompt engineers can mitigate these risks.

Example: When crafting prompts for customer support AI, a prompt engineer should include instructions that highlight empathy and understanding, preventing the AI from offering dismissive or inappropriate responses to users dealing with sensitive issues, such as mental health concerns.

Strategies for Minimizing Ethical Risks

Prompt engineers can adopt several strategies to reduce ethical risks:

- Inclusive Language: Use neutral and inclusive language in prompts to avoid reinforcing stereotypes or biases.

- Iterative Testing: Continuously test AI outputs across different use cases to identify unintended biases and refine prompts accordingly.

- Auditing: Regularly audit AI outputs for bias and fairness, ensuring that different demographic groups are not disproportionately affected by the same prompt.

- User Control: Allow users to review and modify prompts, particularly in applications that involve personal judgment or decision-making, to ensure transparency and adaptability.
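The auditing and iterative-testing strategies can be partly automated with counterfactual prompts: run the same prompt with only a demographic detail swapped and compare the outputs. The Python sketch below assumes a generic `model` callable and a caller-supplied `score` function; the stub model and the word-count metric are deliberately crude placeholders, and a real audit would use a task-appropriate metric plus human review.

```python
from itertools import product
from typing import Callable


def counterfactual_prompts(template: str, **slots: list[str]) -> list[str]:
    """Fill the template with every combination of slot values (e.g. swapped names)."""
    keys = list(slots)
    return [template.format(**dict(zip(keys, combo)))
            for combo in product(*(slots[k] for k in keys))]


def audit(template: str, model: Callable[[str], str], score: Callable[[str], float],
          **slots: list[str]) -> dict[str, float]:
    """Score the model's output for each counterfactual variant of the prompt."""
    return {prompt: score(model(prompt))
            for prompt in counterfactual_prompts(template, **slots)}


def max_gap(results: dict[str, float]) -> float:
    """Spread between the best- and worst-scoring variants; large gaps warrant review."""
    return max(results.values()) - min(results.values())


def fake_model(prompt: str) -> str:
    return "Great work this year."  # stand-in for a real LLM call


def word_count(text: str) -> float:
    return float(len(text.split()))  # crude placeholder metric


template = ("Write a short performance review for {name}, a {role} "
            "who exceeded all targets this year.")
results = audit(template, fake_model, word_count,
                name=["Emily", "Jamal"], role=["software engineer", "nurse"])
print(len(results), "variants; max gap =", max_gap(results))
```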

Ethical considerations are essential to the responsible application of AI in modern industries. Prompt engineers must remain vigilant in creating prompts that promote fairness, transparency, and accountability. By incorporating these practices, AI systems can become more trustworthy, equitable, and effective across various applications.

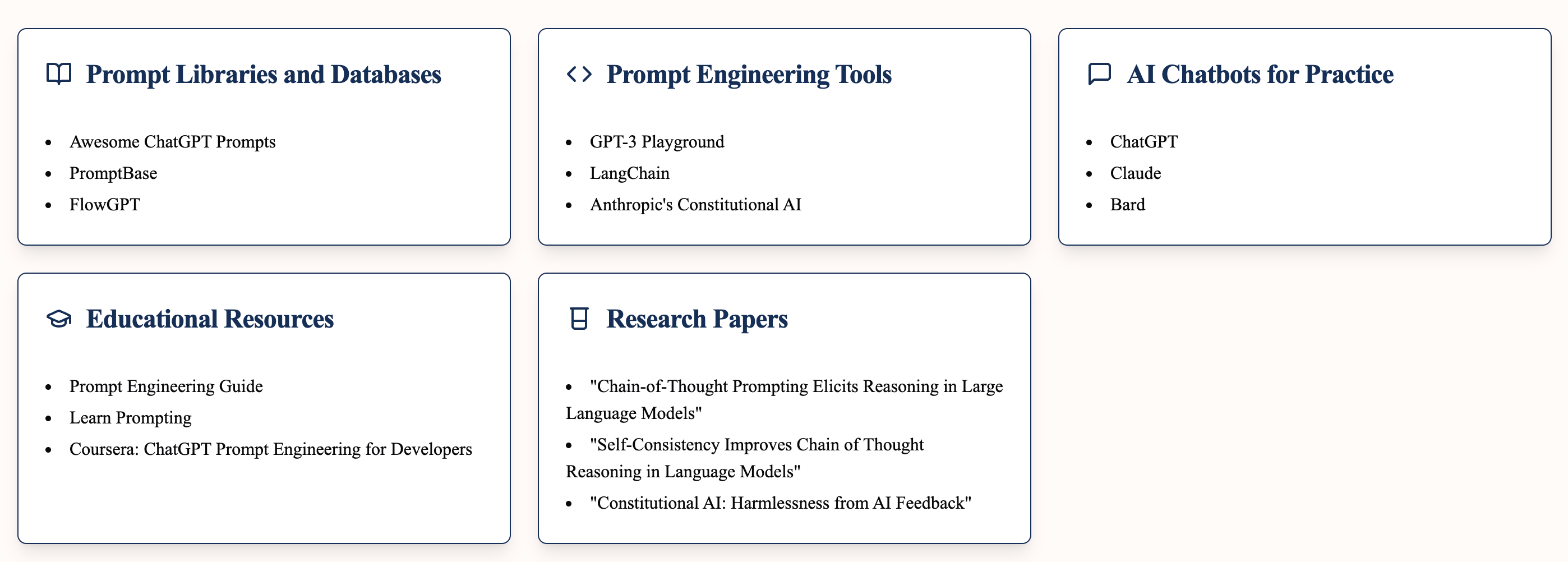

Tools and Resources to Improve Prompt Engineering

To succeed in prompt engineering, it is essential to leverage the right tools and resources that enhance the quality and effectiveness of prompts. Many platforms are available to assist engineers in refining prompts, providing real-time feedback, and optimizing outputs. These tools offer interactive interfaces, testing environments, and performance analysis to help users fine-tune their inputs. Below are some of the most valuable tools and resources available for improving prompt engineering.

1. Interactive Platforms for Prompt Testing

Interactive platforms allow prompt engineers to experiment with different structures and inputs to generate the desired results. These tools provide real-time feedback, enabling users to test and refine prompts in a sandbox environment. Some notable examples include:

- OpenAI Playground: This platform lets users interact with various AI models, including GPT-3 and GPT-4, to explore different prompt formulations. It offers immediate feedback on AI outputs, allowing engineers to test prompts under different configurations and analyze how the AI responds to specific inputs. OpenAI Playground is widely used for rapid experimentation and prototyping of prompts.

- GPT-4 Sandbox: This tool allows users to experiment with GPT-4 in a user-friendly environment, adjusting parameters such as temperature (which controls sampling randomness: low values make responses more focused and deterministic, while high values make them more varied and creative) and max tokens (which caps the length of the response: higher values allow longer outputs, lower values shorten them) to influence the AI's output. It's particularly useful for those who are new to prompt engineering and want to explore how slight changes in prompts can yield different results.

- Hugging Face's Inference API: This platform provides access to many AI models, including GPT-style models, T5, and BERT, for tasks such as text generation, summarization, and classification. Hugging Face is ideal for engineers looking to experiment with various models in different domains, from translation to summarization, while refining prompts in real time.
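Temperature, as exposed by tools like these, is conventionally applied by dividing the model's raw next-token scores (logits) by T before the softmax, so each token's probability is exp(z_i / T) / Σ_j exp(z_j / T). The Python sketch below shows that standard formulation on three hypothetical logits; hosted APIs do this on the server, so the code illustrates the effect rather than anything you would call. Lower temperatures concentrate probability on the top token, while higher temperatures flatten the distribution.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities, scaled by temperature (T > 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate next tokens
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```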

2. Testing and Validation Platforms

In addition to interactive tools, dedicated platforms focus on testing and validating the effectiveness of prompts across various AI models. These platforms ensure that the generated outputs are consistent, relevant, and aligned with the desired outcomes:

- TrueFoundry: TrueFoundry is a robust platform for real-time monitoring and analysis of AI systems. It allows engineers to track performance metrics such as accuracy, coherence, and relevance. The platform helps in identifying weaknesses in prompt design, ensuring that engineers can adjust and refine prompts for more consistent outputs. Testing prompts across multiple systems with TrueFoundry helps reduce the risk of errors and inconsistencies.

- Lettria: A platform focused on natural language processing (NLP) tasks, Lettria offers tools to optimize prompt performance. Engineers can use Lettria to improve the structure and clarity of prompts, especially when working on tasks like text generation, summarization, and sentiment analysis.

3. Collaborative Learning Communities

Collaborative learning communities are essential for developing prompt engineering skills. These communities provide a platform for individuals to share their knowledge and experiences on prompt engineering.

To contribute to a collaborative learning community, you need a good understanding of the following:

- Generative AI models: You need to know how generative AI models work and how they can be used to generate different types of output. Sharing insights on model capabilities and limitations can help the community learn and grow.

- Prompting techniques: You need to know the different prompting techniques that can be used to elicit a response from a generative AI model. Discussing various techniques and their applications can lead to innovative approaches.

- Complex tasks: You need to know how to break down complex tasks into simpler tasks that can be handled by a generative AI model. Collaborative problem-solving can help tackle challenging tasks more effectively.

- Few-shot prompting: You need to know how to use few-shot prompting to elicit a response from a generative AI model. Sharing examples and case studies can illustrate the effectiveness of this technique.

- Prompt engineering guide: You need to have a guide on how to design and optimize prompts to get a desired response from a generative AI model. A shared guide can serve as a valuable resource for the community.

By developing a collaborative learning community, you can share your knowledge and experiences on prompt engineering with others. This can help to improve your prompt engineering skills and achieve your goals and objectives more efficiently and effectively.

Learning from a community of engineers and researchers is essential for staying updated on the latest techniques and strategies in prompt engineering. Online communities not only provide resources and case studies but also foster collaboration and idea-sharing:

- Learn Prompting: Learn Prompting offers a structured curriculum designed to help both beginners and advanced users master prompt engineering. With tutorials, case studies, and practical exercises, it is an invaluable resource for engineers looking to refine their skills and adopt advanced techniques such as few-shot learning and contextual layering.

- Reddit's Prompt Engineering Forum: This community is an excellent platform for prompt engineers to discuss strategies, share success stories, and troubleshoot issues. The forum is highly active, offering insights into new developments and prompt engineering techniques. It's an ideal space to collaborate with peers, exchange tips, and find inspiration for new prompt ideas.

4. Open-Source Libraries and Documentation

For engineers who want to dive deeper into AI frameworks, open-source libraries provide the flexibility to experiment directly with models and develop more sophisticated prompts:

-

GPT-Neo and T5: These open-source transformer models let prompt engineers experiment without the constraints of proprietary tools. By working with GPT-Neo, T5, and other transformer models, engineers can build custom solutions for specific tasks, such as document summarization, question answering, or language translation.

-

Hugging Face Library: Hugging Face's open-source Transformers library is a go-to resource for engineers who want to experiment with a wide variety of pretrained transformer models. Its extensive documentation helps users compare model architectures and refine their prompt-writing techniques.
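As a concrete starting point for the T5 and Hugging Face items above: T5 frames every task as text-to-text and was trained with plain-text task prefixes such as `summarize:` and `translate English to German:`, so the prefix is itself part of the prompt. The sketch below builds such prompts and can optionally run them through the Transformers `pipeline` helper. It assumes a Transformers 4.x install (where the `"text2text-generation"` task is available) and the public `t5-small` checkpoint. The names `T5_PREFIXES`, `t5_prompt`, `t5_qa_prompt`, and `run_t5` are hypothetical helpers for illustration.

```python
"""Build T5-style task-prefix prompts and, optionally, run them with Hugging Face Transformers."""

# Task prefixes from T5's multitask training; matching this wording helps the
# model recognize which task is being requested.
T5_PREFIXES = {
    "summarize": "summarize: ",
    "en_to_de": "translate English to German: ",
    "en_to_fr": "translate English to French: ",
}


def t5_prompt(task: str, text: str) -> str:
    """Prepend the T5 task prefix for `task` to `text`."""
    if task not in T5_PREFIXES:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(T5_PREFIXES)}")
    return T5_PREFIXES[task] + text.strip()


def t5_qa_prompt(question: str, context: str) -> str:
    """Question-answering prompt in the 'question: ... context: ...' format T5 used for SQuAD."""
    return f"question: {question.strip()} context: {context.strip()}"


def run_t5(prompts: list[str], model_name: str = "t5-small", max_new_tokens: int = 60) -> list[str]:
    """Generate one output per prompt (assumes Transformers 4.x; downloads the model on first use)."""
    # Imported here so the prompt helpers above work without transformers installed.
    from transformers import pipeline

    generator = pipeline("text2text-generation", model=model_name)
    # For a single string input, the pipeline returns a list of dicts, one per generated sequence.
    return [generator(p, max_new_tokens=max_new_tokens)[0]["generated_text"] for p in prompts]


if __name__ == "__main__":
    prompts = [
        t5_prompt("summarize", "Prompt engineering is the practice of designing inputs that "
                               "guide AI models toward accurate, relevant outputs."),
        t5_prompt("en_to_de", "The prompt is part of the input."),
        t5_qa_prompt("What does T5 expect?", "T5 expects a task prefix before the input text."),
    ]
    # Printing only the prompts keeps this runnable offline; see run_t5 for generation.
    for p in prompts:
        print(p)
```

With Transformers installed and network access available, `run_t5(prompts)` should return one generated string per prompt. Changing only the prefix while keeping the input fixed is a quick way to see how strongly the task wording shapes the output.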

Continuous Learning and Educational Platforms for Prompt Engineering Skills

Prompt engineering is an evolving field that requires continuous learning. Educational resources such as blogs, research papers, and webinars are essential for staying ahead of the curve. Some platforms that offer valuable insights into the latest tools and trends include:

-

Simplilearn: Simplilearn offers articles and tutorials focused on the latest prompt engineering tools and techniques. Their resources are regularly updated, providing engineers with insights into the latest advancements in AI and how to improve prompt effectiveness.

-

Coursera and Udemy: These online learning platforms offer courses on natural language processing, AI, and machine learning, equipping engineers with the theoretical knowledge and practical skills needed for prompt engineering. Courses on these platforms often include real-world case studies and hands-on exercises, helping engineers refine their approaches to prompt design.

Many tools and resources can help prompt engineers build their skills, from interactive platforms like OpenAI Playground to collaborative communities like Reddit. Using them helps prompt engineers keep pace with the field and steadily improve the quality and consistency of their outputs. By combining testing platforms, educational resources, and community collaboration, prompt engineers can craft prompts that produce more precise and valuable AI interactions.

Conclusion

Prompt engineering is rapidly emerging as a critical skill in the ever-evolving landscape of AI. From defining clear objectives to refining outputs through iterative processes, the best practices outlined in this article offer a roadmap for maximizing AI potential. Whether you're creating content, analyzing data, or providing customer support, well-crafted prompts lead to more accurate, relevant, and valuable outcomes.

As AI continues to grow in capability and complexity, the ability to design precise, ethical, and effective prompts will be essential for ensuring responsible AI use. The advanced strategies discussed, including few-shot learning, prompt chaining, and contextual layering, offer experienced engineers ways to further optimize AI interactions in specialized fields. Meanwhile, ethical considerations, such as avoiding bias and ensuring transparency, are paramount in maintaining trust and fairness in AI systems.

Finally, the tools and resources available today provide invaluable support for testing, refining, and enhancing prompt design. By staying connected with collaborative learning communities, leveraging AI-powered platforms, and continually learning new techniques, professionals can remain at the forefront of this dynamic field.

In the end, mastering prompt engineering is not just about improving AI performance; it is about unlocking AI's full potential to solve complex challenges, support informed decisions, and create meaningful experiences across industries. As AI evolves, so will the art of prompt engineering, making it an essential competency for forward-looking businesses and professionals.

Please Note: This content was created with AI assistance. While we strive for accuracy, the information provided may not always be current or complete. We periodically update our articles, but recent developments may not be reflected immediately. This material is intended for general informational purposes and should not be considered as professional advice. We do not assume liability for any inaccuracies or omissions. For critical matters, please consult authoritative sources or relevant experts. We appreciate your understanding.