We're pleased to announce that Giselle now supports OpenAI's groundbreaking GPT-4.1 series, available as of today. Although GPT-4.1 was released only recently, our Giselle development team has already completed the integration, in keeping with our commitment to bringing new capabilities to users without delay. This new family of models is changing how we approach complex tasks and build intelligent AI agents. With significant improvements in coding, instruction following, and long-context understanding, the GPT-4.1 family (GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano) offers capabilities that stand to reshape AI workflows across industries.

GPT-4.1 Series: Beyond the Specs

The GPT-4.1 family represents more than just improved benchmarks—it's about enabling practical solutions to real development challenges. While the technical specifications are available on OpenAI's official site, what matters most is how these advancements translate to actual productivity gains for developers and businesses.

The tiered approach of the series—GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano—means that teams can now select the right balance of capability, speed, and cost for each specific task. Rather than a one-size-fits-all solution, this flexibility allows for strategic deployment of AI resources across different parts of your workflow.

What makes GPT-4.1 truly impressive is how it addresses the everyday pain points developers face when working with AI coding assistants. The model now understands code context more holistically, meaning it can help you navigate complex repositories without losing track of the relationships between components.

The improved code diff capabilities are particularly valuable in real-world scenarios—instead of generating entirely new files (and potentially introducing incompatibilities), the model can suggest targeted changes that integrate smoothly with your existing codebase. This means less time spent cleaning up AI-generated code and more time focusing on what matters.
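To illustrate what a targeted change looks like next to a regenerated file, here is a minimal Python sketch using the standard library's `difflib.unified_diff`. The file contents and the `targeted_diff` helper are hypothetical; the point is only that a diff carries the changed lines plus a little surrounding context, leaving the rest of the file untouched.

```python
import difflib


def targeted_diff(original: str, edited: str, path: str = "app.py") -> str:
    """Return a unified diff between two versions of a file.

    Only changed lines (plus one line of context on each side) appear,
    which is the kind of output a diff-oriented model is asked to produce.
    """
    return "".join(
        difflib.unified_diff(
            original.splitlines(keepends=True),
            edited.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
            n=1,  # one line of context around each change
        )
    )


# Hypothetical file: only the body of greet() changes.
before = (
    "import os\n"
    "\n"
    "def greet(name):\n"
    "    return 'Hi ' + name\n"
    "\n"
    "def main():\n"
    "    print(greet(os.getenv('USER', 'there')))\n"
)
after = before.replace("'Hi ' + name", "f'Hello, {name}!'")

print(targeted_diff(before, after))
```

The printed hunk touches only the `greet` body; `import os` and `main()` never appear, which is exactly what makes a diff easier to review and merge than a rewritten file.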

For frontend work, the improvements are immediately visible in the quality of generated interfaces—more intuitive layouts, better accessibility considerations, and more thoughtful user experiences. But perhaps most importantly for teams working in production environments, GPT-4.1 shows much more restraint in its modifications, touching only what needs to be changed and respecting the existing architecture.

Superior Instruction Following

Perhaps the most critical improvement for AI agent builders is GPT-4.1's enhanced instruction following. The model follows complex instructions more reliably, scoring 10.5 percentage points higher than GPT-4o on Scale's MultiChallenge benchmark.

According to OpenAI's official announcement, this advancement allows AI agents to better maintain coherence throughout conversations, track information from earlier messages, and follow specific formatting requirements. The models excel in several key instruction categories:

- Format following (specifying custom formats like XML, YAML, Markdown)

- Negative instructions (behaviors to avoid)

- Ordered instructions (steps to follow in sequence)

- Content requirements (specific information to include)

- Ranking (ordering output in particular ways)

- Overconfidence handling (saying "I don't know" when appropriate)
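As a concrete illustration of these categories, the sketch below assembles a chat-style message list whose system prompt combines a format instruction, a negative instruction, ordered steps, a content requirement, and an "I don't know" fallback, then checks a reply against those rules locally. The rule wording, the `build_messages` and `check_reply` helpers, the JSON shape, and the `TICKET-` id pattern are all assumptions for illustration, not part of Giselle or OpenAI's API; only the `role`/`content` message layout follows the common chat-completions convention.

```python
import json
import re

ORDER = ["reproduce", "fix", "test"]  # hypothetical required step order

SYSTEM_RULES = "\n".join([
    # Format following: the reply must be a JSON object.
    'Reply only with a JSON object of the form {"steps": [...], "summary": "..."}.',
    # Negative instruction: a behavior to avoid.
    "Never mention internal ticket IDs.",
    # Ordered instructions: steps in a fixed sequence.
    "List exactly three steps, starting with: reproduce, fix, test.",
    # Content requirement: specific information to include.
    "The summary must name the affected file.",
    # Overconfidence handling.
    "If the affected file is unknown, set the summary to \"I don't know\".",
])


def build_messages(user_request: str) -> list[dict[str, str]]:
    """Put the rules in the system turn so they apply to every later turn."""
    return [
        {"role": "system", "content": SYSTEM_RULES},
        {"role": "user", "content": user_request},
    ]


def check_reply(reply: str, affected_file: str) -> list[str]:
    """Return the rule violations found in a reply (empty list means OK)."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return ["not valid JSON"]
    if not isinstance(data, dict):
        return ["reply is not a JSON object"]
    problems = []
    steps = data.get("steps", [])
    in_order = len(steps) == len(ORDER) and all(
        str(step).lower().startswith(word) for step, word in zip(steps, ORDER)
    )
    if not in_order:
        problems.append("steps missing or out of order")
    if re.search(r"\bTICKET-\d+", reply):
        problems.append("mentions a ticket ID")
    summary = str(data.get("summary", ""))
    if affected_file not in summary and summary != "I don't know":
        problems.append("summary does not name the file")
    return problems


good = json.dumps({
    "steps": ["Reproduce the crash", "Fix the null check", "Test with empty input"],
    "summary": "Bug in parser.py",
})
print(check_reply(good, "parser.py"))  # []
```

Keeping the rules in the system turn is one simple way to make them persist across a multi-turn exchange; the local checker is useful for spotting regressions when you swap models.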

For multi-turn interactions—essential for sophisticated AI agents—GPT-4.1 delivers substantially better performance. The models can remember instructions from several turns back in a conversation and continue to follow them accurately, a critical capability for complex workflows.

On the other hand, Giselle does not currently have persistent memory, so it cannot retain prior instructions or context across long conversational interactions, which limits some use cases. However, by linking nodes together, developers can pass the output of one step into the next and control how context flows through a workflow. Nothing is decided yet, but we are exploring experiences that would offer memory-like functionality in the future.
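One way to picture linking nodes as a stand-in for memory is to pass each node's output forward explicitly, so later steps see earlier results as part of their input. The sketch below models nodes as plain Python functions over a shared context dictionary; the `Node` type, `run_chain`, and the node names are hypothetical and are not Giselle's internal API, and the lambdas stand in for model calls.

```python
from typing import Callable

# A node is a (name, function) pair; the function reads the accumulated
# context and returns that node's output text.
Node = tuple[str, Callable[[dict[str, str]], str]]


def run_chain(nodes: list[Node], user_input: str) -> dict[str, str]:
    """Run nodes in order, storing each output under the node's name.

    No node keeps state of its own; earlier outputs stay visible to later
    nodes only because the whole context is handed forward at each step.
    """
    context = {"input": user_input}
    for name, fn in nodes:
        context[name] = fn(context)
    return context


# Hypothetical three-node workflow; real nodes would call a model.
chain: list[Node] = [
    ("rules", lambda ctx: "Answer in one sentence."),
    ("draft", lambda ctx: f"Draft answer to: {ctx['input']}"),
    ("final", lambda ctx: f"{ctx['draft']} (following: {ctx['rules']})"),
]

result = run_chain(chain, "What changed in v2?")
print(result["final"])
```

The trade-off of this design is that context grows with every node, which is where a large context window helps.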

Million-Token Context Window

All models in the GPT-4.1 family, including GPT-4.1 nano, can process up to 1 million tokens of context, roughly an 8x increase over GPT-4o's 128,000-token limit. This marks the first time that even the smallest model in an OpenAI model family supports such an extensive context.

This expanded context window enables AI agents to process the equivalent of more than 8 copies of the entire React codebase, opening new possibilities for handling large codebases or extensive documentation sets simultaneously.
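The arithmetic behind these figures, plus a rough pre-flight check for whether a document will fit, can be sketched as follows. The four-characters-per-token ratio is a common rule of thumb for English text, not an exact count (a real tokenizer such as OpenAI's `tiktoken` gives precise numbers), and `fits_in_context` with its 32,000-token output reserve is a hypothetical helper for illustration. The limits are the rounded figures quoted above.

```python
import math

OLD_LIMIT = 128_000      # previous context limit quoted above
NEW_LIMIT = 1_000_000    # GPT-4.1 family limit, rounded as in the text
CHARS_PER_TOKEN = 4      # rough heuristic for English text (assumption)


def estimate_tokens(text: str) -> int:
    """Very rough token estimate: about four characters per token."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, limit: int = NEW_LIMIT,
                    reserve_for_output: int = 32_000) -> bool:
    """True if the estimated prompt plus a reserve for the reply fits the limit."""
    return estimate_tokens(text) + reserve_for_output <= limit


print(NEW_LIMIT / OLD_LIMIT)  # 7.8125, i.e. roughly 8x
doc = "x" * 2_000_000         # about 500,000 estimated tokens
print(fits_in_context(doc), fits_in_context(doc, limit=OLD_LIMIT))  # True False
```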

What's more impressive is that GPT-4.1 maintains reliable attention across this entire million-token span. The models can accurately retrieve specific information regardless of its position in the context (beginning, middle, or end), even when the full million tokens are in use. OpenAI demonstrated this with tasks such as processing large log files and identifying specific entries hidden deep within massive datasets.
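Position-independent retrieval is usually checked with a "needle in a haystack" test: hide one distinctive entry at a chosen depth inside a large block of filler and ask the model to find it. The sketch below only builds such test cases from synthetic log lines; the log format, `NEEDLE_ID`, `build_haystack`, and the scoring rule are assumptions, and sending the prompt to a model is left out.

```python
import random

NEEDLE_ID = "needle-7731"  # hypothetical id the model should find
NEEDLE = f"ERROR req={NEEDLE_ID} status=500"


def build_haystack(num_lines: int, depth: float, seed: int = 0) -> tuple[str, int]:
    """Return (log_text, needle_index) with the needle at a relative depth.

    depth=0.0 places the needle first, 0.5 in the middle, 1.0 last.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    rng = random.Random(seed)  # seeded so test cases are reproducible
    lines = [f"INFO req={rng.randint(1000, 9999)} status=200" for _ in range(num_lines)]
    index = round(depth * num_lines)
    lines.insert(index, NEEDLE)
    return "\n".join(lines), index


def make_prompt(log_text: str) -> str:
    """Append the question after the log, as a retrieval eval might."""
    return f"{log_text}\n\nWhich request returned status=500? Reply with its req id only."


def is_correct(answer: str) -> bool:
    """Score an answer as correct if it contains the needle's id."""
    return NEEDLE_ID in answer


for depth in (0.0, 0.5, 1.0):
    text, idx = build_haystack(num_lines=10_000, depth=depth)
    print(depth, idx, text.splitlines()[idx] == NEEDLE)
```

Sweeping `depth` and `num_lines` gives a grid of cases; a model with reliable long-context attention should score the same across all of them.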

For enterprise applications, this translates to better processing of legal documents, more thorough code analysis, and enhanced customer support capabilities that can reference extensive knowledge bases. The ability to maintain context over extremely long sequences makes these models particularly valuable for complex, document-intensive workflows.

GPT-4.1 in a Developer’s Toolbox

As someone who has been deeply immersed in AI coding tools recently, I'm particularly excited about GPT-4.1's arrival. My journey with AI-assisted coding started with simple autocompletions but quickly evolved into more complex use cases like refactoring legacy codebases and building new features from scratch. While previous models showed promise, they often fell short when handling larger projects or maintaining consistency across multiple files.

The enhanced code diff capabilities are especially promising for my workflow. Rather than having the AI generate entire files from scratch (which often introduces compatibility issues), GPT-4.1's improved ability to produce precise diffs means I can integrate AI suggestions more seamlessly into existing codebases. This alone could transform my daily productivity by focusing AI assistance exactly where it's needed without disrupting established code.

I'm eagerly looking forward to exploring how GPT-4.1 nano's speed and efficiency might enhance my real-time coding experience, particularly for rapid prototyping sessions where latency matters more than ultimate sophistication. The fact that even this smallest model supports the full million-token context is frankly game-changing for developers like me who juggle complex projects with extensive documentation and interdependencies.

Giselle: Integration with GPT-4.1 Series

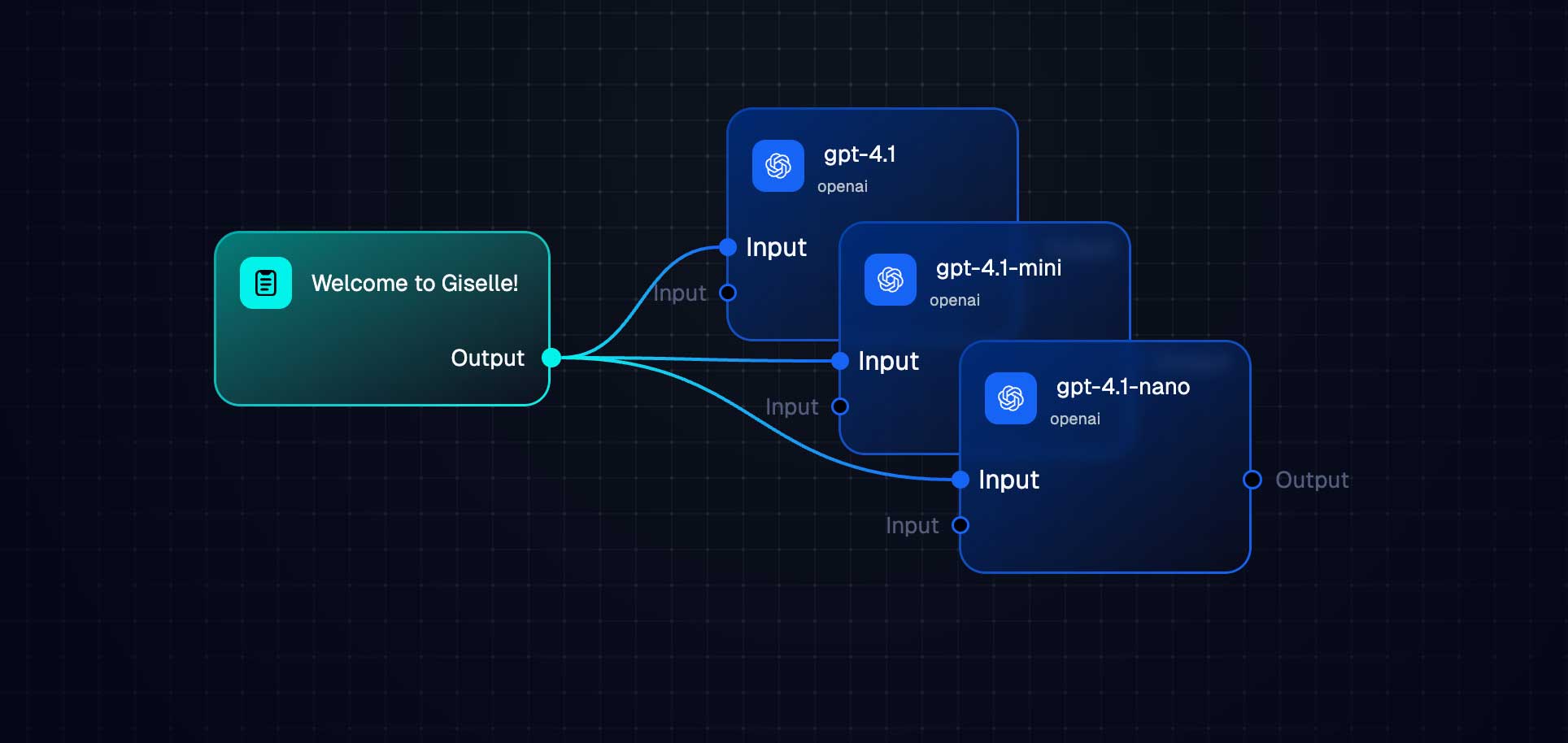

We're excited to announce that Giselle now fully supports the GPT-4.1 series, allowing users to harness these powerful models through our intuitive drag-and-drop, node-based interface. This integration enables teams to quickly build and deploy AI agents capable of tackling increasingly complex tasks with greater reliability.

Our team has already implemented support for all three models in the series:

- GPT-4.1: Ideal for complex reasoning, advanced coding tasks, and sophisticated multi-step workflows

- GPT-4.1 mini: Perfect for balanced performance and cost efficiency, with multimodal capabilities that Michelle from OpenAI noted "really punches above its weight"

- GPT-4.1 nano: Optimized for rapid, cost-effective operations like classification, autocompletion, and information extraction
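Choosing a tier per task can be as simple as a lookup table. The sketch below maps hypothetical task categories to the three model identifiers as they appear in OpenAI's API (`gpt-4.1`, `gpt-4.1-mini`, `gpt-4.1-nano`); the categories and the step-down rule for latency-sensitive work are assumptions that mirror the descriptions above, not a Giselle setting. In Giselle itself, the model is selected in your workflow configuration.

```python
from enum import Enum


class Task(Enum):
    # Hypothetical task categories, mirroring the list above.
    MULTI_STEP_REASONING = "multi_step_reasoning"
    ADVANCED_CODING = "advanced_coding"
    GENERAL_ASSISTANT = "general_assistant"
    IMAGE_UNDERSTANDING = "image_understanding"
    CLASSIFICATION = "classification"
    AUTOCOMPLETION = "autocompletion"
    EXTRACTION = "extraction"


MODEL_FOR_TASK = {
    Task.MULTI_STEP_REASONING: "gpt-4.1",
    Task.ADVANCED_CODING: "gpt-4.1",
    Task.GENERAL_ASSISTANT: "gpt-4.1-mini",
    Task.IMAGE_UNDERSTANDING: "gpt-4.1-mini",
    Task.CLASSIFICATION: "gpt-4.1-nano",
    Task.AUTOCOMPLETION: "gpt-4.1-nano",
    Task.EXTRACTION: "gpt-4.1-nano",
}

# Assumption: latency-sensitive work trades capability for speed by one tier.
STEP_DOWN = {"gpt-4.1": "gpt-4.1-mini", "gpt-4.1-mini": "gpt-4.1-nano"}


def pick_model(task: Task, latency_sensitive: bool = False) -> str:
    """Return a model id for a task, stepping down a tier if latency matters."""
    model = MODEL_FOR_TASK[task]
    if latency_sensitive:
        model = STEP_DOWN.get(model, model)  # nano has no smaller tier
    return model


print(pick_model(Task.ADVANCED_CODING))                          # gpt-4.1
print(pick_model(Task.ADVANCED_CODING, latency_sensitive=True))  # gpt-4.1-mini
print(pick_model(Task.CLASSIFICATION, latency_sensitive=True))   # gpt-4.1-nano
```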

This gives you unprecedented flexibility in balancing performance, speed, and cost based on your specific use case. Our engineering team completed the integration shortly after the official API release, ensuring Giselle users have immediate access to these groundbreaking models.

As reflected in the GitHub pull request that added support for these models (#708), we've implemented a comprehensive integration that maintains compatibility with existing workflows while unlocking the new capabilities.

Transforming AI Workflows with Enhanced Reliability

When Giselle's streamlined workflow tools connect with GPT-4.1's advanced capabilities, the results are transformative. Organizations can now build AI agents that excel at tasks previously challenging for AI, from complex code reviews to nuanced information synthesis.

A powerful example from OpenAI's announcement was a document analysis application built with just a few lines of code. It processed and analyzed a NASA server log file containing over 450,000 tokens, a task that would not fit in a single request to previous models with a 128,000-token limit. With Giselle's node-based interface, similar capabilities can be deployed with even less technical overhead.

The improved instruction following means fewer instances of agents misinterpreting requests or losing track of complex workflows. These models excel at maintaining coherence across multi-turn conversations and following complex, detailed instructions—essential qualities for reliable AI agents.

Meanwhile, the expanded context window allows for processing larger documents and codebases without losing important information. As mentioned in OpenAI's announcement, the million-token context window can accommodate more than 8 complete copies of the entire React codebase, making it perfect for legal document analysis, extensive codebase reviews, or processing lengthy research papers.

For enterprises managing complex projects, this means AI agents that can maintain awareness across multiple documents, code files, and previous conversations, leading to more cohesive and intelligent assistance. Varun from Windsurf noted during the announcement that GPT-4.1 is "50% less verbose than other leading models," resulting in more focused and efficient interactions—a quality our enterprise clients particularly value.

The combination of Giselle's user-friendly interface and GPT-4.1's enhanced capabilities democratizes access to advanced AI agent technology. Teams can now build sophisticated AI workflows without extensive technical expertise, focusing on strategic objectives rather than implementation details.

These advancements represent not just incremental improvements but a significant leap forward in what's possible with AI agents. The improved instruction following abilities scale particularly well with long-context data, allowing agents to maintain consistent behavior even when processing large documents or complex codebases.

As we continue to refine our integration with the GPT-4.1 series, we're excited to see how our users will leverage these capabilities to build ever more sophisticated AI workflows. The improved performance opens up new possibilities for AI agent deployment across organizations of all sizes.

Availability and Future Vision

All three GPT-4.1 models are available immediately through the Giselle platform. To get started, simply log in to your Giselle account and select the appropriate GPT-4.1 model in your workflow configurations.

At Giselle, we're committed to continuously expanding our capabilities to meet the demands of the AI-driven development era. Our support for GPT-4.1 represents just one step in our broader vision to provide increasingly convenient and powerful product development experiences.

In the coming weeks, I'll be sharing detailed use cases and practical examples of how you can leverage the GPT-4.1 series with Giselle to transform your workflows. Rather than simply rehashing the technical specifications (which you can find on OpenAI's official documentation), I'll focus on real-world applications and step-by-step guides for implementing these powerful models in your projects. From building sophisticated code agents to creating intelligent document processing systems, we'll explore the practical possibilities that open up when Giselle's intuitive interface meets GPT-4.1's advanced capabilities.

The true potential of these models isn't in their benchmark scores but in how they solve real problems for developers and businesses. By integrating cutting-edge models like the GPT-4.1 series, we're enabling developers to focus on innovation rather than implementation details. As AI continues to evolve, Giselle will remain at the forefront, ensuring that our users have access to the most advanced tools available, wrapped in our intuitive, user-friendly interface.

We believe that the combination of powerful AI models and thoughtfully designed workflow tools will define the next generation of software development. With the integration of GPT-4.1, we're taking another significant stride toward that future—one where AI works alongside developers to turn ideas into reality more efficiently than ever before.