Google’s Gemini 2.0 model raises the bar for how we process information and interact in real time. With advanced reasoning, multimodal support, and long-context understanding, Gemini 2.0 powers tools such as Deep Research and the Multimodal Live API that could genuinely reshape entire industries.

Gemini 2.0’s Enhanced Multimodal Capabilities

The current wave of AI has moved beyond simply structuring and interpreting data. We’re now seeing the rise of more proactive, “agentic” AI models. These aren’t just reactive systems: they interpret the task at hand, plan ahead, and can execute actions under human guidance. Gemini 2.0 is built for this style of work, and its abilities go beyond earlier, more passive AI solutions. By automating multi-step work that used to need a person at every step, it is changing how companies operate, make decisions, and manage day-to-day workflows. In short, it’s more than a new version; it signals a shift in AI’s role across the business landscape.

One standout aspect of Gemini 2.0 is its enhanced multimodal functionality, with native support for image, audio, and video input alongside text. This lets the model process and interpret several data types together, in a way that feels closer to human cognition. For instance, it can take in a video clip, follow its context, and pinpoint key insights, a task that used to demand a lot of manual effort. Whether you’re reviewing video-driven customer interactions or optimizing supply chains, handling multiple data formats opens the door to more robust AI-powered solutions and more nuanced, well-rounded results.

Another major leap comes from Gemini 2.0’s capacity for handling extended context. Complex tasks often require a model to remember what happened many steps back and keep a coherent understanding of lengthy interactions. Picture a customer service scenario: an AI agent with long-context awareness can keep a user’s purchase history, previous inquiries, and preferences in view, making the experience more personal and efficient. This spares customers from repeating themselves, which improves satisfaction and trims operational costs. Ultimately, the ability to hold and use that much information at once lets AI tackle more intricate challenges than before.

Deep Research: The Future of Information Synthesis

A headline feature of the Gemini 2.0 launch is Deep Research, which is designed for high-volume, data-intensive tasks. By combining long-context processing with advanced reasoning, it acts like a research assistant: it carries out multi-step analyses and compiles comprehensive reports within minutes. That removes the grind of manually combing through large volumes of material and frees users to focus on strategic decisions.

For example, a graduate student exploring robotics trends might enter a broad query, and Deep Research would autonomously draft a plan, sift through web data, and deliver a structured report complete with source links. Whether you’re an entrepreneur doing competitive analysis or a marketer benchmarking AI-driven campaigns, this cuts research time and delivers actionable insights with far less effort.
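To make the plan, search, and report loop concrete, here is a minimal Python sketch. It does not call Gemini or Deep Research; `make_plan`, `search`, `research`, and the tiny `CORPUS` are hypothetical stand-ins that we made up for illustration, and a real system would delegate planning and synthesis to the model and search to the live web.

```python
from dataclasses import dataclass


@dataclass
class Source:
    title: str
    url: str
    text: str


# Hypothetical in-memory corpus standing in for web search results.
CORPUS = [
    Source("Soft robotics survey", "https://example.com/soft-robotics",
           "soft robotics grippers trends"),
    Source("Warehouse automation report", "https://example.com/warehouse",
           "warehouse robotics automation trends"),
    Source("Cooking blog", "https://example.com/cooking", "pasta recipes"),
]


def make_plan(query: str) -> list[str]:
    """Split a broad query into sub-questions (assumed; a model would do this)."""
    return [f"{query}: current state", f"{query}: open problems"]


def search(step: str, corpus: list[Source]) -> list[Source]:
    """Return sources that share at least one keyword with the plan step."""
    words = {w.strip(":").lower() for w in step.split()}
    return [s for s in corpus if words & set(s.text.lower().split())]


def research(query: str, corpus: list[Source] = CORPUS) -> str:
    """Run every plan step and compile a report that lists source links."""
    lines = [f"Report: {query}"]
    for step in make_plan(query):
        lines.append(f"\n## {step}")
        for src in search(step, corpus):
            lines.append(f"- {src.title} ({src.url})")
    return "\n".join(lines)
```

Calling `research("robotics trends")` returns a two-section report that cites the two robotics sources and leaves out the unrelated one. The point of the sketch is the shape of the loop, not the quality of the keyword matching.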

Empowering Business Applications

Deep Research’s knack for spotting patterns, analyzing trends, and revealing market insights makes it indispensable across many fields:

- Startups: Competitive analysis, site selection, and more

- Enterprises: Assessing market trajectories and improving operational efficiency

- Academics: Streamlining research from data collection all the way to interpretation

Multimodal Live API: Redefining Interactive AI Experiences

Gemini 2.0’s Multimodal Live API unlocks real-time audio and video streaming, paving the way for highly interactive AI applications. Organizations can integrate this API into use cases like virtual assistants or mission-critical areas such as security, healthcare, and manufacturing.

Real-Time Multimodal Capabilities

By processing video, audio, and text in tandem, this API enables:

- Live video analysis for incident detection or sentiment monitoring

- Audio synthesis with multilingual, flexible text-to-speech outputs—ideal for customer support or media setups

- Integrated task execution using tool calls and multimodal inputs

Imagine a virtual meeting platform that offers live translation, detects overall sentiment, and summarizes tasks automatically—all of which can greatly improve teamwork and decision-making.
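As a rough illustration of that meeting scenario, the sketch below routes a mixed stream of audio, video, and text events through a single handler. It is not the Multimodal Live API: the `Event` and `MeetingState` types, the `"smoke_detected"` label, and the "Action item:" convention are all assumptions made for the example, and the events are plain strings rather than real media.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class Event:
    kind: str     # "audio", "video", or "text"
    payload: str  # transcript chunk, frame label, or chat message


@dataclass
class MeetingState:
    transcript: list[str] = field(default_factory=list)
    alerts: list[str] = field(default_factory=list)
    tasks: list[str] = field(default_factory=list)


def handle(event: Event, state: MeetingState) -> None:
    """Update the shared meeting state according to the event's modality."""
    if event.kind == "audio":
        state.transcript.append(event.payload)
        # Hypothetical convention: spoken action items start with this prefix.
        if event.payload.lower().startswith("action item:"):
            state.tasks.append(event.payload.split(":", 1)[1].strip())
    elif event.kind == "video":
        # Hypothetical frame label produced by an upstream detector.
        if event.payload == "smoke_detected":
            state.alerts.append(event.payload)
    elif event.kind == "text":
        state.transcript.append(f"[chat] {event.payload}")


async def run_session(events: list[Event]) -> MeetingState:
    """Feed events through a queue so producing and handling are decoupled."""
    queue: asyncio.Queue = asyncio.Queue()
    state = MeetingState()

    async def producer() -> None:
        for event in events:
            await queue.put(event)
        await queue.put(None)  # sentinel: the stream has ended

    async def consumer() -> None:
        while (event := await queue.get()) is not None:
            handle(event, state)

    await asyncio.gather(producer(), consumer())
    return state
```

Running `asyncio.run(run_session(events))` on a short list of events returns a state object holding the transcript, any alerts, and the extracted tasks. In a real integration, the producer would be the streaming connection and the handler would hand work to the model and its tools.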

A Leap Forward in AI-Powered Innovation

Gemini 2.0 Flash goes beyond the earlier Gemini 1.5 Pro, bringing faster speeds and greater precision, as well as native multimodal outputs like image generation and adjustable speech synthesis. Combine that with native tool use (e.g., Google Search and user-defined third-party functions), and you get a level of flexibility and accuracy that’s hard to beat.

Future Implications

The launch of Gemini 2.0 lays the foundation for truly agentic AI systems, where AI can plan, execute, and refine tasks with only light human oversight. Consider Jules, an experimental coding assistant that integrates into GitHub workflows: it shows how AI can automate repetitive development tasks and free engineers to focus on innovation.

In many ways, Gemini 2.0 marks a turning point in AI, revolutionizing how businesses, researchers, and developers leverage technology to maximize both productivity and creativity. Whether it’s simplifying complex research or boosting real-time interactions, Gemini 2.0 sets a new benchmark for intelligent, collaborative AI.

Giselle: Integration with Gemini 2.0

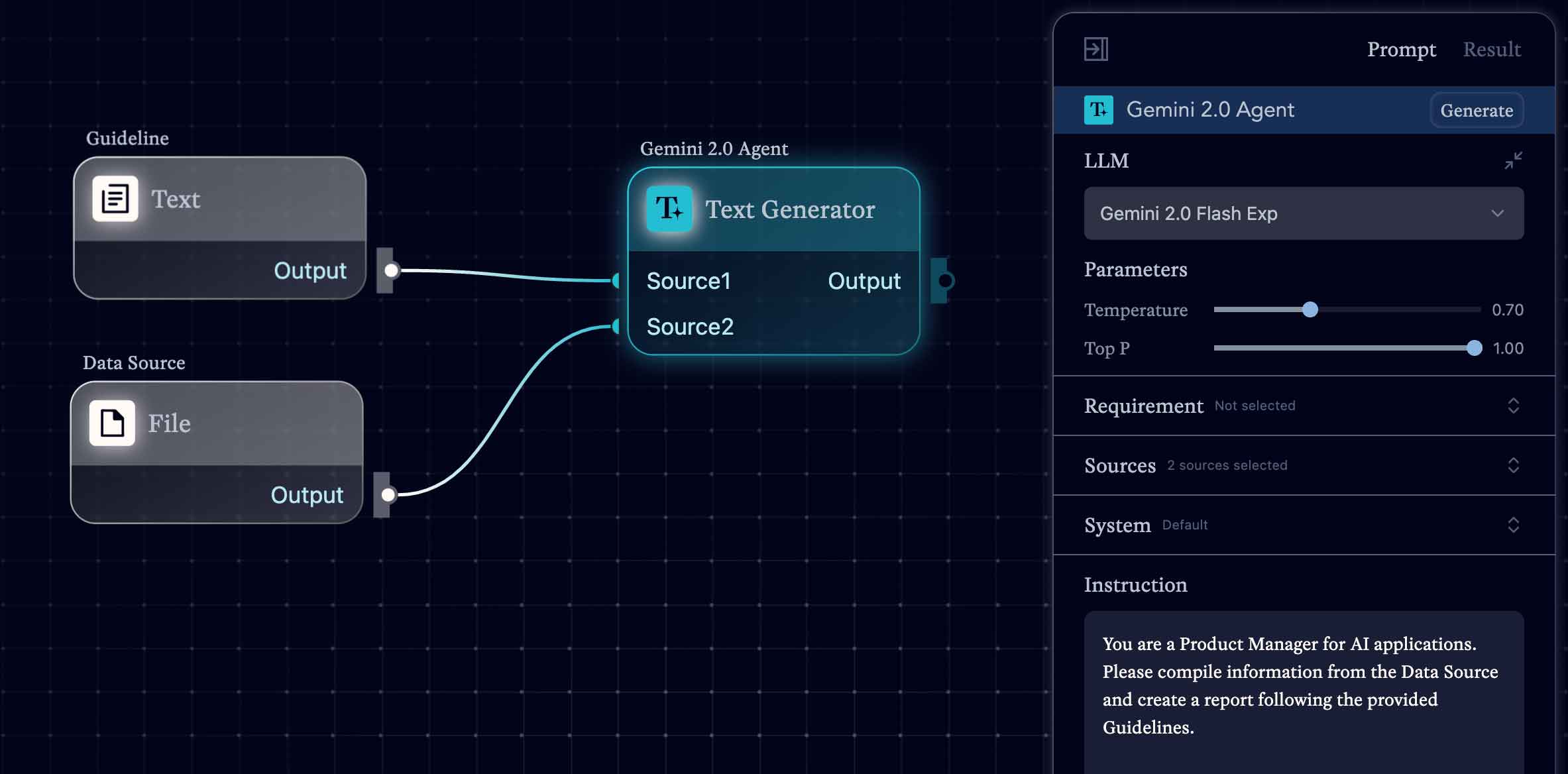

As companies scale up and strive for greater efficiency, AI-driven workflow automation has become vital. Enter Giselle, a platform with a drag-and-drop, node-based interface that lets you set up and manage sophisticated AI workflows. Whether the task is market research or code review, teams can quickly build AI agents to tackle all sorts of jobs, boosting collaboration and overall output.

When I first tried out Gemini 2.0 Flash Exp on Giselle, I found its text generation capabilities highly effective, quickly making it a useful part of my routine for tasks like analysis, research, and report drafting. Many colleagues have also praised its performance, calling it one of the most impressive LLMs they’ve encountered. While it’s already a powerful tool, I believe we’re just beginning to uncover its full potential, especially with the prospect of enhanced multimodal features on the horizon.

What truly sets Giselle apart, though, is how user-friendly it is. Even if you don’t have a technical background, you can drag and drop nodes to create workflows. That means you spend less time wrestling with the nuts and bolts and more time focusing on strategy. This combination of an accessible interface and a robust AI engine is exactly why Giselle resonates with so many types of organizations.

When Giselle’s node-based workflow tools meet Gemini 2.0’s advanced multimodal capabilities, the synergy is striking. Businesses can integrate Gemini 2.0’s impressive functionality right into their automated pipelines, building AI agents capable of more sophisticated tasks and deeper analysis. This doesn’t just help streamline operations—it can spark new opportunities and fuel innovation.

Automating Complex Tasks

Perhaps the biggest advantage of merging these technologies is their ability to create AI agents that can autonomously tackle complex tasks. By marrying top-tier AI with a streamlined automation setup, teams can hand off routine work to the AI and devote their attention to strategic or creative endeavors. In short, we’re moving past simple task automation and ushering in a new era of truly intelligent operations.

AI agents built with Gemini 2.0 and Giselle can manage everything from code reviews to market analysis and full-scale document generation. For instance, in a code review, the AI might detect changes, flag potential issues, and propose fixes. Meanwhile, a market analysis agent could pull data from multiple sources, interpret the findings, and turn them into actionable strategies for decision-makers. Such use cases highlight the real-world impact of combining a powerful AI with a purpose-built workflow platform.
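The code review example maps naturally onto a node graph, so here is a small Python sketch of how such a workflow could be wired and executed. This is not Giselle’s implementation or API: the node functions, the `NODES` and `EDGES` tables, and the `eval(` check are hypothetical, and in practice each node would call a model rather than a simple rule. The only library call is `graphlib.TopologicalSorter` from the Python standard library, which orders nodes so that each runs after its dependencies.

```python
from graphlib import TopologicalSorter

# Hypothetical diff used as the workflow's input.
DIFF = "+result = eval(user_input)\n-result = int(user_input)\n+print(result)"


def detect_changes(inputs: dict) -> list[str]:
    """Collect the added lines (those starting with '+') from the diff."""
    return [line[1:].strip()
            for line in inputs["source"].splitlines()
            if line.startswith("+")]


def flag_issues(inputs: dict) -> list[str]:
    """Flag added lines that match a toy rule; a model would do this in practice."""
    return [line for line in inputs["detect_changes"] if "eval(" in line]


def propose_fixes(inputs: dict) -> list[str]:
    """Turn each flagged line into a suggestion."""
    return [f"Replace '{line}' with a safer alternative"
            for line in inputs["flag_issues"]]


NODES = {
    "detect_changes": detect_changes,
    "flag_issues": flag_issues,
    "propose_fixes": propose_fixes,
}

# Each node maps to the set of nodes whose output it consumes.
EDGES = {
    "detect_changes": {"source"},
    "flag_issues": {"detect_changes"},
    "propose_fixes": {"flag_issues"},
}


def run_workflow(nodes: dict, edges: dict, seed: dict) -> dict:
    """Execute nodes in dependency order, passing upstream results downstream."""
    results = dict(seed)
    for name in TopologicalSorter(edges).static_order():
        if name in results:  # seeded inputs such as "source" need no execution
            continue
        inputs = {dep: results[dep] for dep in edges.get(name, ())}
        results[name] = nodes[name](inputs)
    return results
```

Calling `run_workflow(NODES, EDGES, {"source": DIFF})` returns a dictionary with one entry per node, so each intermediate result stays inspectable. Keeping the edges as data rather than hard-coded calls is what lets a drag-and-drop editor rearrange the graph without touching the node logic.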

This approach benefits organizations of all sizes. Giselle’s tools let smaller companies streamline tasks like documentation or AI-driven collaboration without major staffing increases, so they can scale more smoothly and stay competitive. Larger enterprises can unify processes and data across departments, simplifying large-scale projects and day-to-day operations.

That said, Giselle currently utilizes only Gemini 2.0’s text-generation features, so a fully multimodal experience isn’t yet available. We know that’s a limitation, and we’re actively working to roll out user-friendly multimodal features to maximize the platform’s potential down the line.

Scaling Productivity with AI Agents

As AI agents keep evolving, both in what they can do and how adaptable they are, they have the potential to dramatically increase productivity and spur innovation across industries. However, with greater capability comes the responsibility to ensure these tools are deployed ethically, transparently, and safely. The more advanced AI gets, the broader its impact on society—so strong governance, compliance, and ethical oversight are essential for sustainable development.

From a business standpoint, the perks go beyond merely automating tasks. AI agents can offer strategic insights, support collaboration, and help unify teams under a shared vision. But bringing these sophisticated technologies into your workflow also calls for solid guidelines and responsible usage policies—think AI-enabled red teaming to spot vulnerabilities. Without these measures, it’s hard to fully realize the potential of agentic AI.

Ultimately, as AI-driven workflows and automation continue to expand, we’re on the brink of a massive shift in how we work and do business. The real question is how we can integrate these cutting-edge tools in a way that is both responsible and imaginative, ensuring they lead to sustainable, long-term progress.