Today, Giselle officially adds OpenAI’s newest o-series models—o3 and o4-mini. We merged PR #729 just eight days after the 2025-04-16 launch—not quite as instant as we’d hoped, but we pushed hard to get them to you as quickly as possible.

Model-by-model highlights

| Item | o3 (deep-reasoning specialist) | o4-mini (speed & cost champion) |
|---|---|---|
| Ideal scenarios | Complex multimodal reasoning, in-depth analysis | High-volume traffic, low latency, budget-sensitive |
| Flagship benchmarks | Codeforces Elo 2706 / MMMU 82.9% / SWE-bench 69.1% | AIME 2025 92.7% (no tools), 99.5% (with Python) |
| Inference traits | Autonomously chains tools; excels at deep, multi-step workflows | Offers higher usage limits and better cost-efficiency than o3 while also improving benchmark accuracy (per OpenAI) |
| Input | Text + Image | Text + Image |
| Max context | 200 k tokens | 200 k tokens |
| Output cap | 100 k tokens | 100 k tokens |
| Cost (API) | $10 / M input tokens, $40 / M output tokens | $1.10 / M input tokens, $4.40 / M output tokens |
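
To make the price gap in the table concrete, here is a minimal sketch that estimates the API cost of a single request from its token counts. The prices are copied from the table above; the function name `estimate_cost` and the example token counts are illustrative assumptions, not part of Giselle or the OpenAI SDK.

```python
# Prices in USD per one million tokens, copied from the table above.
PRICES_PER_MILLION = {
    "o3": {"input": 10.00, "output": 40.00},
    "o4-mini": {"input": 1.10, "output": 4.40},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request (hypothetical helper)."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Illustrative request: 50k input tokens and 5k output tokens.
    for model in PRICES_PER_MILLION:
        print(f"{model}: ${estimate_cost(model, 50_000, 5_000):.3f}")
```

For that example request, the sketch works out to roughly $0.70 on o3 versus about $0.077 on o4-mini, which is the kind of difference that matters at high volume.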

Which one to choose?

- o3 – Pick when you need meticulous research reports, image-rich UX reviews, or cross-repo refactors where depth and stability trump everything.

- o4-mini – Deploy for chatbots, code-gen APIs, or any scenario where throughput and unit economics matter. It often outperforms o1-pro at lower cost, though task-specific validation is recommended.

Both models support a 200 k-token context window, roughly 150 k words, in a single prompt. With Reasoning Token Support, you can also pull the model’s thought trace around every tool call via the API.

Unchanged: why pair them with Giselle?

1. Access to High-Performance Mode

o-series models decide on the fly when to search the web, run code, or generate images. In Giselle, simply pass any text or image data to an OpenAI o3/o4-mini node and the heavy lifting is done for you. Enable Web Search and the agent automatically pulls in broader, more accurate information from the internet, letting it tackle even more sophisticated queries.

2. Deep, multimodal reasoning

Just upload a design mock-up or a whiteboard snapshot through a File-Upload node and pipe it into a Generation node. o3 / o4-mini will parse the image and can produce anything from a detailed execution plan to fully-generated code—all in one pass.

3. Long-form consistency

With a 200 k-token window, the agent can keep multiple legal contracts in context, answer clause-level questions, or refactor code that spans several repositories. The Giselle team is actively enhancing GitHub integration, so expect documentation and analysis that draw on even larger codebases in the near future.
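
As a rough way to check whether a set of documents is likely to fit, the sketch below estimates token counts from word counts. It assumes the ratio implied earlier in this post (200 k tokens to roughly 150 k words, so about 0.75 words per token); real tokenizers vary by language and content, and the helper names and the 20 k-token output reserve are hypothetical choices, not Giselle settings.

```python
import math

CONTEXT_WINDOW_TOKENS = 200_000  # max context for o3 and o4-mini, per this post
WORDS_PER_TOKEN = 0.75           # assumption: 150k words / 200k tokens


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a text from its whitespace-split words."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(texts, reserved_output_tokens=20_000):
    """Return (fits, estimated_input_tokens) for a list of documents.

    reserved_output_tokens is an assumed safety margin left for the reply.
    """
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserved_output_tokens <= CONTEXT_WINDOW_TOKENS, total


if __name__ == "__main__":
    contract = "clause " * 75_000  # stand-in for a 75k-word contract
    print(fits_in_context([contract]))
    print(fits_in_context([contract, contract]))
```

Treat the result as a first-pass estimate only; for anything close to the limit, counting tokens with the model's actual tokenizer is the safer check.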

Note: Not every official OpenAI API feature is yet surfaced inside Giselle.

Get started now

- Log in — use GitHub, Google, or email.

- Choose a plan — Pro or higher unlocks o3 and o4-mini.

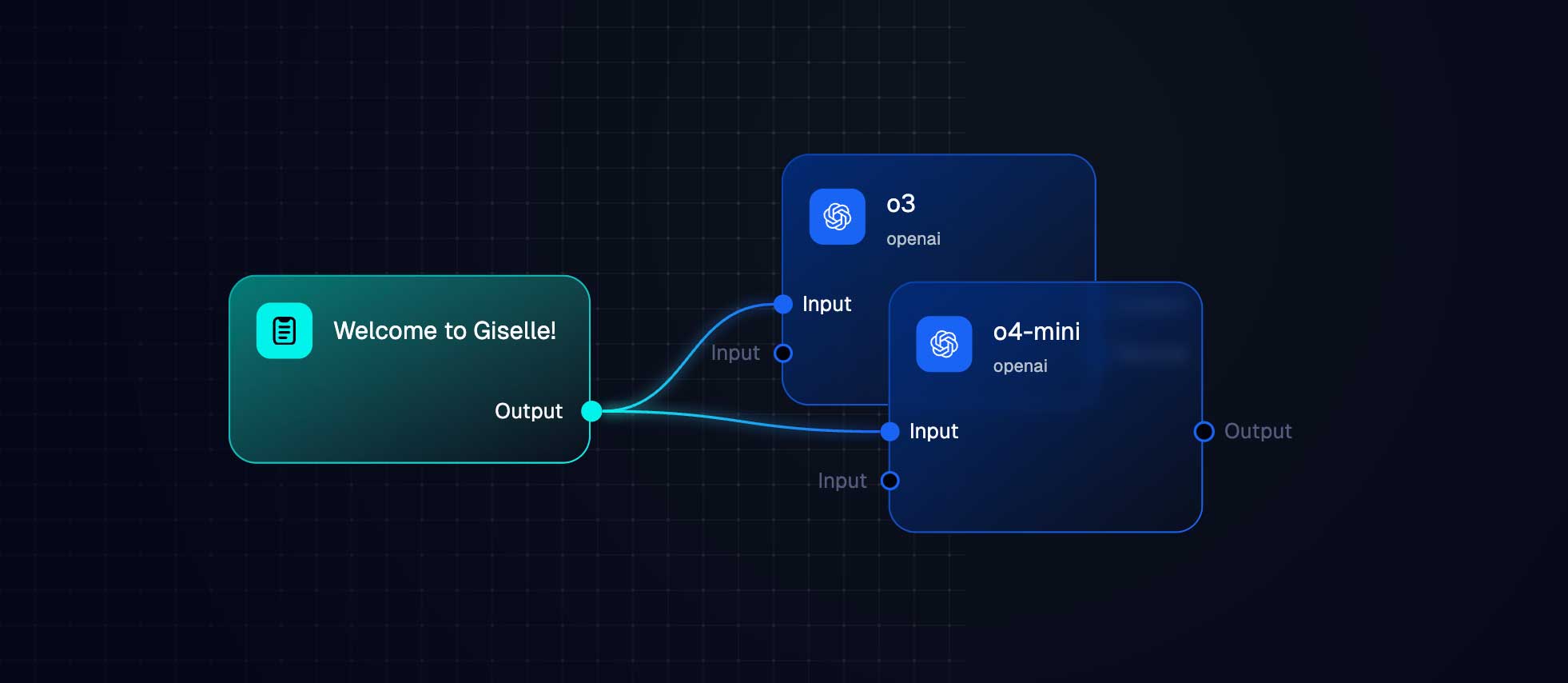

- Add a node — drag an OpenAI o3 or o4-mini Generation Node onto your canvas.

- Connect input sources — link images, PDFs, or datasets to the node.

- Write a prompt & run — the model invokes the necessary tools and returns results automatically.