The goal stays human

Designing for distance

Living with my dog pushed me to build a media site with distance — not louder, not faster, not more optimized than existing pet media.

The first decision was not what to build, but which decisions would remain human.

Worldview. Tone. Editorial granularity. These were never delegated to AI.

What I wanted from AI was not automation, but support for judgment — help in thinking clearly, not deciding for me.

The tools we used — and what each was responsible for

This project relied on many tools, but none of them were treated as general-purpose solutions. Each was given a narrow, explicit role.

Site foundation: vibe coding (with Giselle)

The site started through vibe coding — establishing the architectural baseline required for a media platform, before defining its final visual or editorial form.

The goal wasn't to skip architecture, but to let it emerge through iteration without stalling progress.

Giselle supported this phase not by generating code, but by holding context across iterations while things were still fluid.

Concept definition

The editorial concept was shaped using Claude Skills to define tone, constraints, and boundaries. Those definitions were then brought into Giselle and used for repeated sparring.

Not to generate content — but to question assumptions and sharpen decisions.

Site design (without Figma)

There were no Figma files. Design lived in my head first and was implemented directly through code.

Adobe tools were used only as modular parts — assets, not blueprints. The actual design process happened through implementation itself.

Expression

- Suno for playful, experimental sound-based expression

- Midjourney for visual exploration and mood validation

Neither was used to finalize decisions. They existed to widen expressive range, not to define it.

Infrastructure

Vercel handled what I didn't want to think about:

- Deployment

- Domains

- Preview URLs

- Analytics

- Database integration via Supabase

GitHub acted as the shared backbone throughout.

Review and evaluation

- Giselle handled structural code review from within the system, questioning whether the architecture made sense for a growing, SEO-driven media platform

- Gemini and other models evaluated the site from the outside, as readers, critics, and search engines might

What we actually did with Giselle

Giselle was used across the project, not to generate outputs, but to support decisions.

Here are three workflows that shaped how we worked.

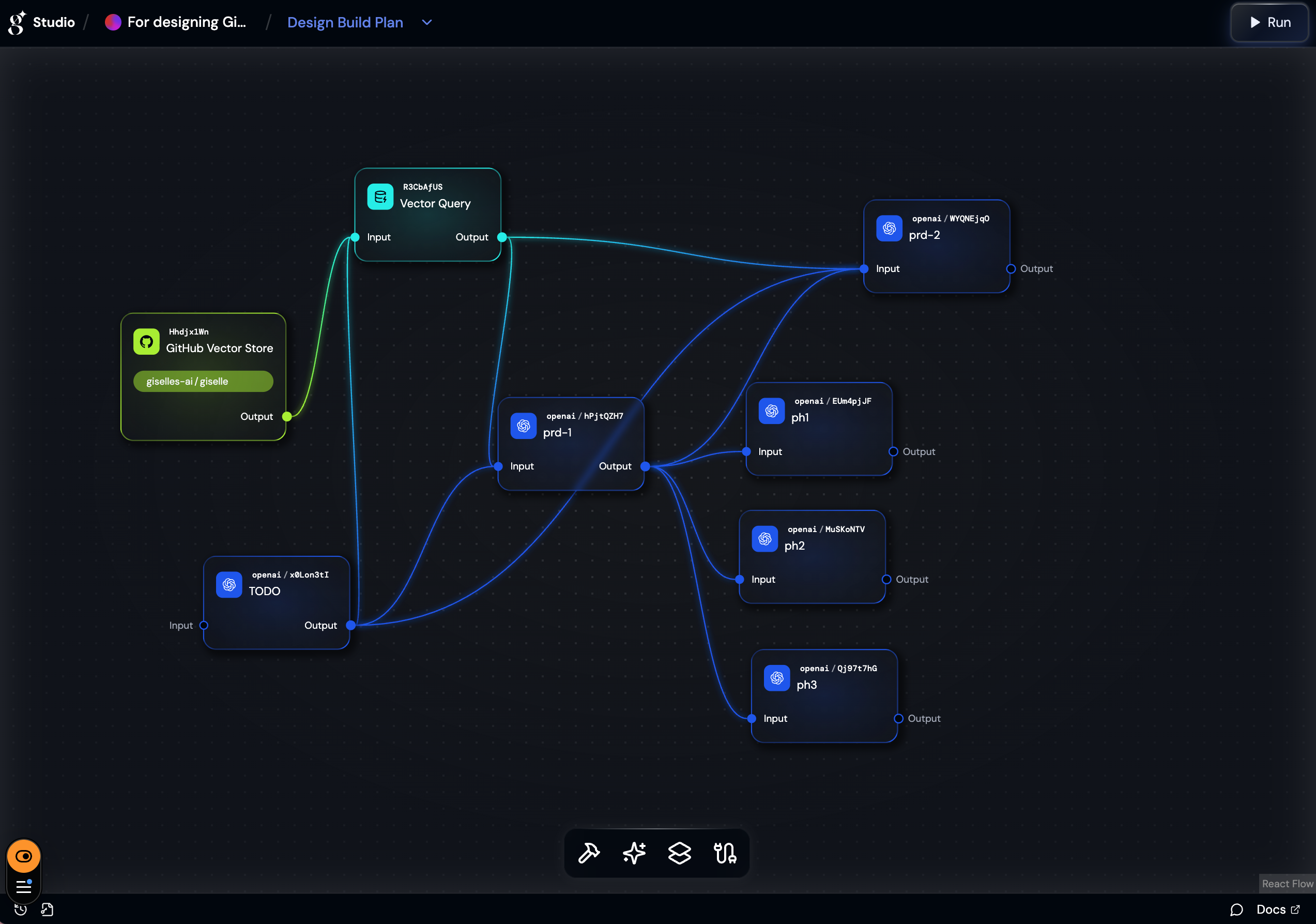

Structural code review

Giselle was asked to learn from existing repositories — how media platforms organize code, content, and SEO-driven structure. Based on those patterns, it proposed structural improvements without starting from scratch.

Editorial expansion

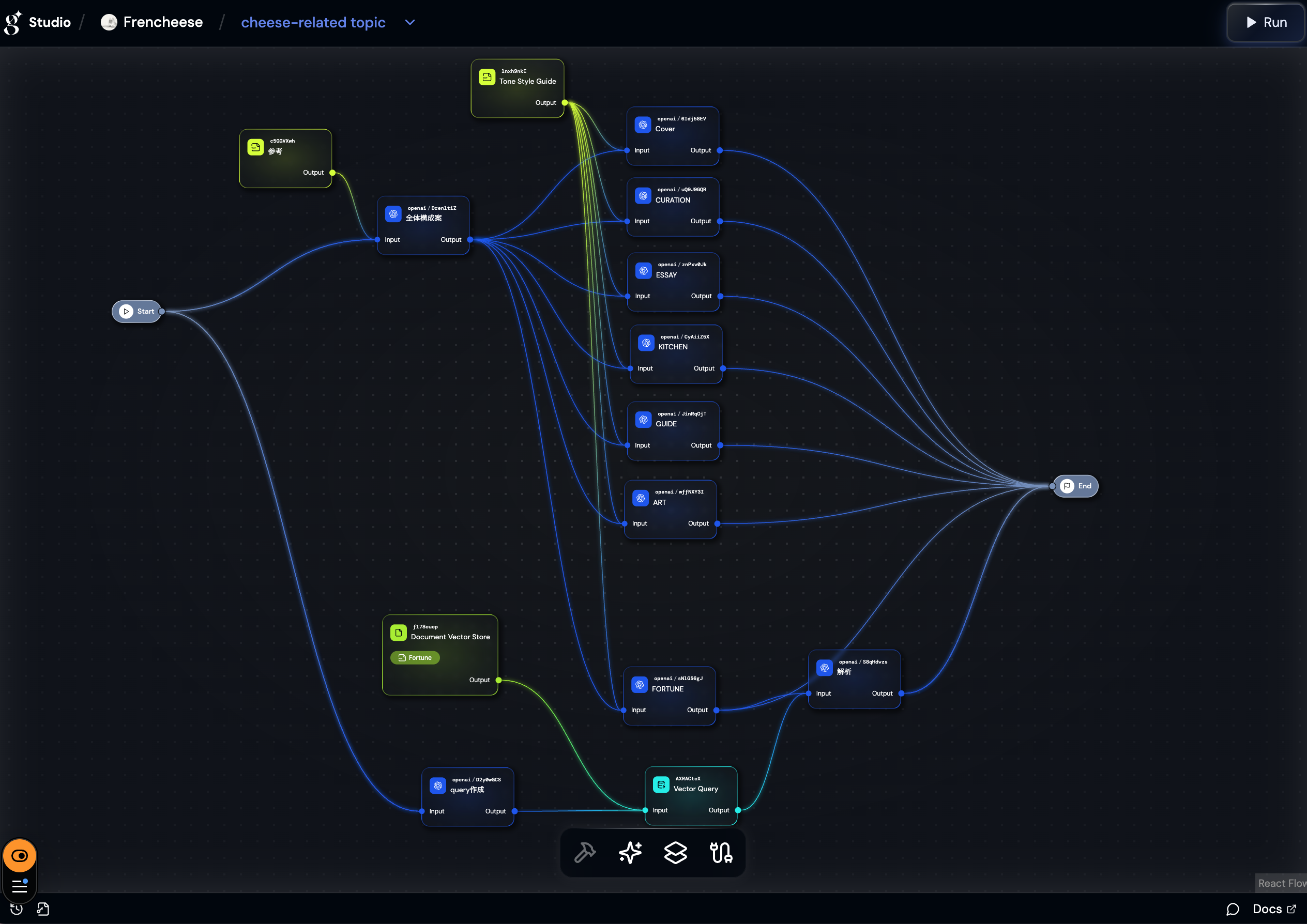

Giselle supported conceptual judgment. It was used to expand a monthly editorial theme into section-level inspiration across the media site.

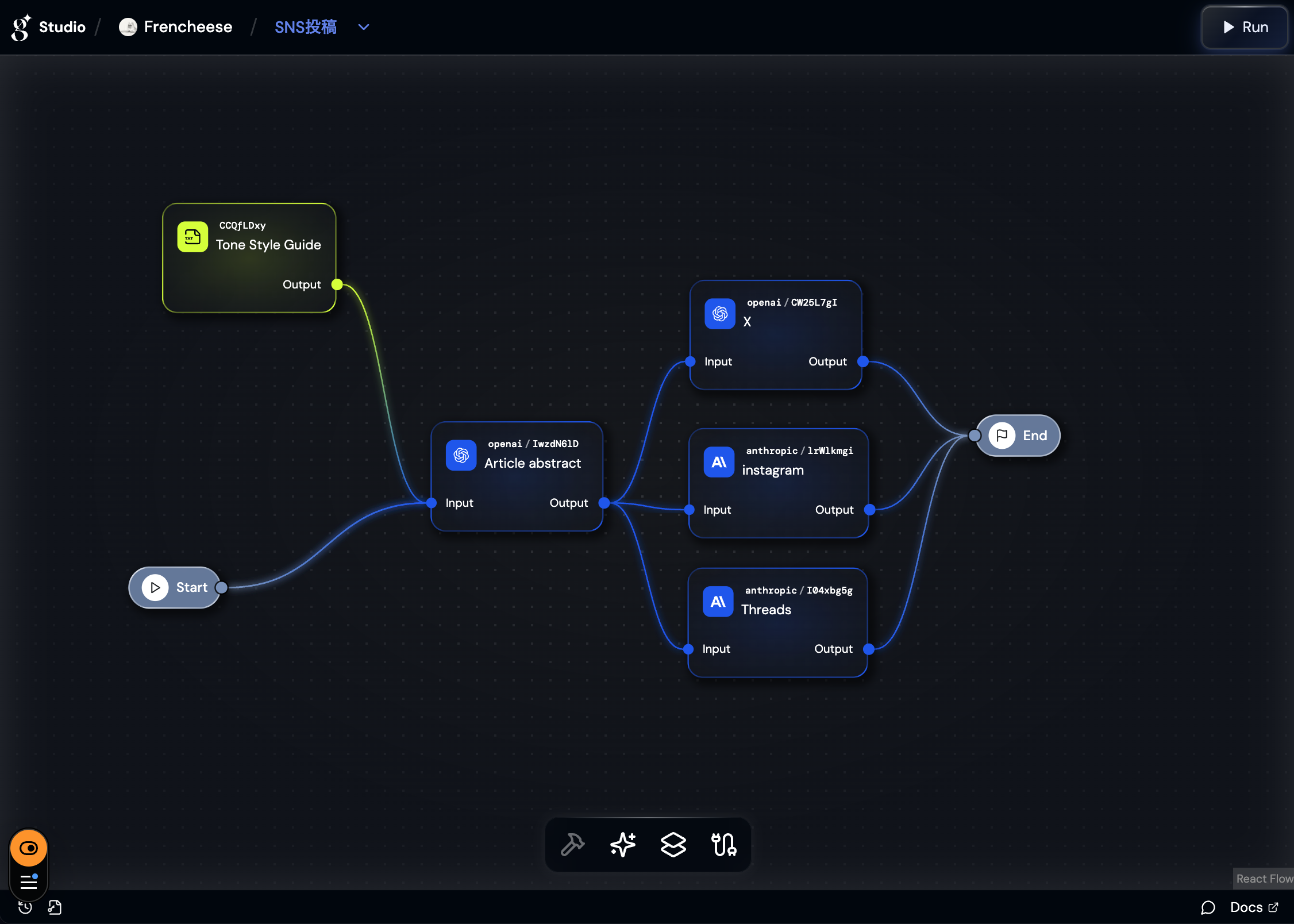

Operational workflows (not yet in use)

Some workflows were built for operational convenience — designed now, but waiting to be put into real use as the site matures.

Across all stages, Giselle wasn't asked to fix or produce. It was positioned where structure becomes judgment.

What other products handled instead

Execution, expression, and speed

Other tools played critical roles — just not at the decision layer.

- v0 / Cursor → execution speed and implementation momentum

- Claude / ChatGPT → localized thinking support and iteration

- Midjourney / Suno → expressive range and creative exploration

- Gemini → perspective disruption and critique

All of them operated below the decision layer.

They moved fast, explored widely, and challenged assumptions — but they did not decide direction.

What Giselle is actually good at

A layer above tools

Giselle is not an AI that does the work for you. It is not a content generator. It is not a shortcut for decision-making.

Its strength lies elsewhere.

- Reading systems, not outputs

- Making structure explicit

- Holding repeated decisions in view

Giselle sat above execution and expression — a layer where human judgment could remain effective instead of being drowned out.

It didn't compete with other tools. It coordinated the space in which they made sense.

AI usage is a placement problem

This approach is not universal. If full automation is the goal, this is not the right model.

But if human judgment is non-negotiable, then the real question is not what AI can do.

It is where you place it.

Giselle wasn't a labor-saving device. It was infrastructure for judgment.

Most of all, it was simply fun to see ideas that had existed only in my head turn into something tangible.